Introduction

Generative AI application design & development

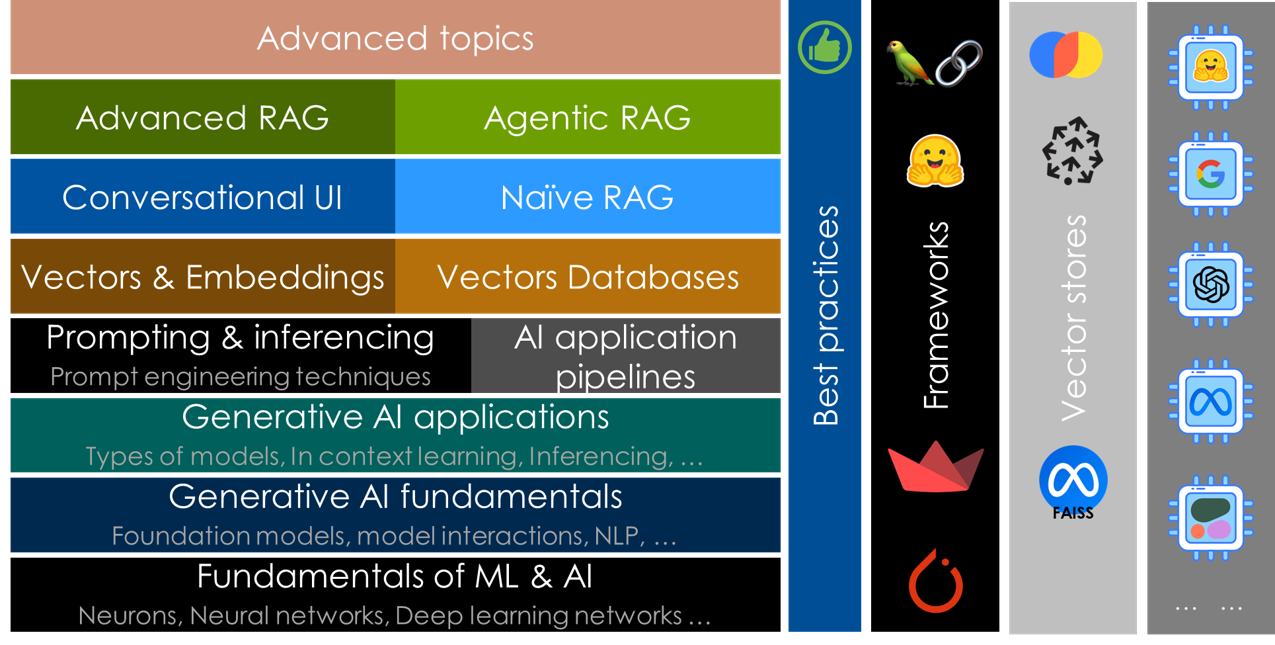

This comprehensive course on Generative AI Application Development offers a deep dive into the fundamentals and advanced applications of Generative AI technologies, focusing on the use of cutting-edge models and tools. The course begins by introducing core concepts in artificial intelligence, machine learning, and neural networks, with practical exercises on setting up neural networks and working with platforms like Hugging Face.

It explores natural language processing tasks, such as natural language understanding and generation, providing learners with hands-on experience in building and experimenting with AI models. As the course progresses, learners are introduced to advanced techniques, such as inference control parameters and in-context learning, allowing for a deeper understanding of model behavior and optimization.

Moving into more specialized areas, the course covers topics such as vector embeddings, search algorithms, and large language models (LLMs). Learners will gain experience in utilizing tools like LangChain, StreamLit and Hugging Face for both simple and complex AI tasks, including building conversational UIs, implementing advanced retrieval-augmented generation techniques, and managing datasets for training and testing.

The later modules focus on sophisticated applications such as agent-based RAG, where learners build AI agents that can interact with tools and manage multi-step reasoning tasks. By the end of the course, participants will be equipped with the knowledge and practical skills to create and optimize AI-driven applications, from basic neural networks to advanced retrieval systems and AI agents.

Modules

1. Generative AI: Fundamentals

The “Generative AI: Fundamentals” section introduces key lessons on the building blocks of AI and Generative AI.

Section starts with an overview of AI, ML, and Neural Networks, followed by an in-depth look at neurons and their role in deep learning. Hands-on exercises, like experimenting with neural networks and setting up access to Google Gemini models, reinforce these concepts.

The section also introduces platforms like Hugging Face, guiding learners through its features, community, and practical use of AI models. Additionally, it covers Natural Language Processing (NLP), focusing on how Large Language Models (LLMs) handle tasks related to Natural Language Understanding (NLU) and Natural Language Generation (NLG). Quizzes are included throughout to assess understanding of key concepts.

2. Generative AI applications

The Generative AI Applications section explores key concepts and practical techniques for working with AI models.

Section begins by explaining how models are named, providing insight into the structure and capabilities of different models. It then delves into various model types, including instruct, embedding, and chat models, highlighting their unique functions. The section also covers core NLP tasks like next word prediction and fill-mask, followed by detailed lessons on inference control parameters, such as randomness, diversity, and output length controls, which allow for precise tuning of model behavior. Hands-on exercises and quizzes reinforce these concepts, while an introduction to In-Context Learning reveals how models can learn from examples, simulating human learning processes.

3. Hugging Face Models : Fundamentals

The Hugging Face Models: Fundamentals section introduces key tools and workflows for working with Hugging Face models.

Section begins with a hands-on exercise on installing and using the Hugging Face Transformers library in Python, followed by an exploration of task pipelines and pipeline classes. The section also covers using the Hugging Face Hub to interact with model endpoints and manage repositories. Practical exercises include a summarization task using Hugging Face models, allowing learners to experiment with abstractive and extractive summarization. Finally, the section dives into using the Hugging Face CLI for managing models and caching, ensuring efficient workflows. Quizzes throughout help solidify knowledge.

4. Hugging Face Models : Advanced

The Hugging Face Advanced section delves into the deeper aspects of working with Hugging Face models.

Section starts with an exploration of tensors, the multi-dimensional arrays fundamental to neural networks, and how they are processed within the pipeline classes. The section then covers model configuration classes to understand model architectures and parameters. It also explains the role of tokenizers and demonstrates their use through Hugging Face tokenizer classes. The concept of logits and their application in task-specific classes is examined, followed by an introduction to auto model classes for flexible model handling. The section concludes with a quiz to test your knowledge and an exercise focused on building a question-answering system, applying the learned concepts in a practical scenario.

5. LLM Knowledge and Inferencing

This section provides a comprehensive overview of techniques to enhance the capabilities of large language models (LLMs).

It covers key challenges faced by LLMs and introduces strategies to address them, such as prompt engineering, model grounding and conditioning, and transfer learning. The section also delves into various prompting techniques, including few-shot, zero-shot, chain of thought, and self-consistency, to improve LLM responses. Additionally, the section explores the tree of thoughts technique for solving reasoning and logical problems. Overall, the section equips learners with the knowledge and skills to effectively utilize and optimize LLMs for various tasks.

6. Langchain : Prompts, Chains & LCEL

This section provides a comprehensive overview of LangChain, a powerful framework for building and managing LLM applications.

Section covers key concepts such as prompt templates, few-shot prompt templates, prompt model specificity, LLM invocation, streaming responses, batch jobs, and Fake LLMs. Additionally, the section introduces LangChain Execution Language (LCEL) and its essential Runnable classes for constructing complex LLM chains. By the end of this section, learners will have a solid understanding of LangChain’s core components and be able to effectively use them to build various LLM-based applications.

7. Handling structured responses

This section focuses on the challenges and techniques associated with obtaining structured responses from large language models (LLMs).

Section starts by comparing different data formats and highlighting the importance of structured outputs. The section then introduces LangChain output parsers as a valuable tool for extracting structured information from LLM responses. Through hands-on exercises, learners will practice using specific output parsers like EnumOutputParser and PydanticOutputParser. A comprehensive project, the creative writing workbench, is included to demonstrate the practical application of these concepts. Finally, the section covers strategies for handling parsing errors, providing learners with essential knowledge for building robust LLM applications that produce structured outputs.

8. Datasets for Training, and Testing

This section provides a comprehensive overview of the essential role of datasets in the development and training of large language models (LLMs).

Lessons in this section, explores the nature of data used for pre-training LLMs, the various sources of such data, and the processes involved in creating datasets. Additionally, the section introduces the HuggingFace dataset library and its capabilities, allowing learners to access and work with real-world datasets used for LLM training and testing. Through hands-on exercises, learners will gain practical experience in using the dataset library, accessing data from Hugging Face, and creating and publishing their own datasets. This section equips learners with the necessary knowledge and skills to effectively select, prepare, and manage datasets for LLM development.

9. Vectors & Embeddings

This section provides a deep dive into key concepts related to embeddings, search algorithms, and transformer architecture.

Section starts with an introduction to contextual understanding and foundational elements of the Transformer architecture, including encoder and decoder models. The section explores vectors, vector spaces, and how embeddings are generated by large language models (LLMs), followed by methods for measuring semantic similarity.

Advanced topics include working with Sentence-BERT (SBERT), building classification and paraphrase mining tasks, and utilizing the LangChain library for embeddings. The section covers various search techniques such as lexical, semantic, and kNN search, and introduces optimization metrics like Recall, QPS, and Latency. It also provides hands-on exercises to build a movie recommendation engine and work with search algorithms, including FAISS, LSH, IVF, PQ, and HNSW. The section concludes with lessons on benchmarking ANN algorithms for similarity search.

10. Vector Databases

This section provides a comprehensive overview of key concepts related to vector database systems.

It begins by addressing the challenges faced when using in-memory semantic search libraries, focusing on scalability and performance. The section introduces various vector databases available today, offering guidance on selecting the right database for different workloads.

Hands-on exercises allow learners to work with ChromaDB and integrate custom embedding models for enhanced vector searches. Key search techniques like chunking, symmetric and asymmetric searches are explored in depth. Learners will also gain insights into LangChain’s document loaders, text splitters for chunking, and retrievers for extracting relevant data. Advanced topics such as search scores and Maximal Marginal Relevancy (MMR) are covered, with practical examples. The section concludes with a project on implementing the Pinecone vector database and a quiz to test knowledge of vector databases and search optimization techniques.

11. Conversation User Interface

The section “Conversation User Interface” provides a comprehensive overview of building conversational user interfaces (chatbots) using the Streamlit framework and LangChain library.

It covers key topics such as the fundamentals of conversational UIs, the differences between single-turn and multi-turn conversations, and the essential elements of the Streamlit framework. Additionally, the section explores the importance of conversation memory for maintaining context in chatbot interactions and introduces LangChain conversation memory classes for managing conversation history. Through hands-on exercises, learners will gain practical experience in building interactive chatbots using Streamlit and incorporating conversation memory capabilities. Finally, a project-based exercise allows learners to apply their knowledge to create a real-world PDF document summarization application.

12. Advanced Retrieval Augmented Generation

The section “Advanced Retrieval Augmented Generation (RAG)” delves into the intricacies of retrieval-based techniques for enhancing LLM performance.

Section starts by introducing the concept of RAG and its benefits, followed by an exploration of LangChain’s chain creation functions for building RAG pipelines. The section then discusses challenges associated with conversational RAG and demonstrates a common issue in such scenarios. To address these challenges, learners will build a smart retriever using LangChain utility functions. The section also introduces advanced retrieval patterns like Multi Query Retriever (MQR), Parent Document Retriever (PDR), and Multi Vector Retriever (MVR), providing detailed explanations and code examples for each. Additionally, learners will explore techniques like ranking, sparse, dense, and ensemble retrievers, as well as Long Context Reorder (LCR) and contextual compression. By the end of this section, learners will have a comprehensive understanding of advanced retrieval techniques and their applications in RAG, enabling them to build more effective and sophisticated LLM-based applications.

13. Agentic RAG

The “Agentic RAG” section focuses on building and understanding AI agents, tools, and the concept of Agentic Retrieval-Augmented Generation (RAG).

It begins with an introduction to agents, their interaction with tools and toolkits, and the fundamentals of Agentic RAG. Hands-on exercises allow learners to build both single-step and multi-step agents, exploring their internal workings, including creating an agent without LangChain to better understand the mechanics.

The section also covers LangChain tools, file management toolkits, and utilities for building agentic solutions. Advanced topics include the ReAct framework for multi-step agents, which enhances an agent’s reasoning capabilities. Learners will create a question-answering ReACT agent using external search tools like Tavily. The section wraps up with quizzes and exercises that apply these lessons, helping learners develop practical experience with Agentic RAG and LangChain-based solutions.