Exercise#1 HF LLM Playground

Objective

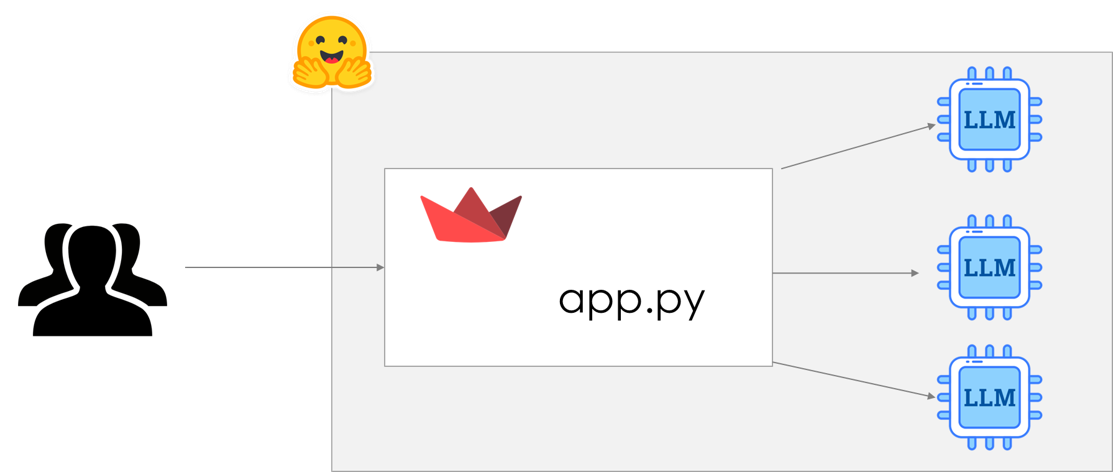

Build a HuggingFace playground application.

- User selects the LLM

- User adjusts the model parameters (optional)

- User provides a query

- Selected model is invoked

- Result is shown to the user

Check out the final application on the HuggingFace Space acloudfan/HF-Playground.

Before proceeding, install the Streamlit Python package.

pip install streamlit

Part-1 Setup Streamlit script

Follow the instructions below to create the application.

1. Create a file app.py

Import the required packages, including Streamlit.

    import streamlit as st
    from dotenv import load_dotenv
    import os
    from langchain_community.llms import HuggingFaceEndpoint

2. Setup the HuggingFace key in env variable

Since you will be using the HuggingFace Inference endpoint, set up the API token as an environment variable. You can do this in multiple ways. The following code assumes that the key is available in an environment (.env) file.

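    # load_dotenv does not raise when the file is missing; it returns False
    # if the file could not be read or defines no variables
    if not load_dotenv('PROVIDE PATH TO YOUR ENV. file'):
        print("Environment file not found !! MUST find the env var HUGGINGFACEHUB_API_TOKEN to work.")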
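Whichever way the variable is set, the app can only call the endpoint when HUGGINGFACEHUB_API_TOKEN is present in the process environment. A minimal sketch of an early check follows; the helper name `require_token` is hypothetical and is not part of the exercise code.

```python
import os

TOKEN_VAR = "HUGGINGFACEHUB_API_TOKEN"

def require_token(env=None):
    """Return the token from the environment mapping, or raise a clear error."""
    # Default to the real process environment; a dict can be passed for testing
    env = os.environ if env is None else env
    token = env.get(TOKEN_VAR, "").strip()
    if not token:
        raise RuntimeError(f"{TOKEN_VAR} is not set; add it to your env file or shell.")
    return token
```

Calling `require_token()` right after `load_dotenv(...)` would make a missing key fail at startup rather than on the first model invocation.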

3. Setup the application title

    # Title
    st.title('HuggingFace LLM playground')

Launch the application for local development. On a terminal:

- Change directory to where you have the app.py

- Run the following command to launch

streamlit run app.py

4. Setup the models Selectbox in sidebar

Set up the list of models that can be invoked in the playground.

    # Models that can be used
    # Add/remove models from this list as needed
    models = [
        'mistralai/Mistral-7B-Instruct-v0.2',
        'google/flan-t5-xxl',
        'tiiuae/falcon-40b-instruct',
    ]

Now create the Selectbox in the sidebar. The selected model name will be available in the variable model_id.

    # Selected model in model_id
    model_id = st.sidebar.selectbox(
        'Select model',
        options=tuple(models)
    )

5. Setup variables in session state

Watch the following video before proceeding with the code.

Streamlit API : st.session_state

We will manage the LLM response in a Streamlit session state key named model-response.

    if 'model-response' not in st.session_state:
        st.session_state['model-response'] = '<provide query & click on invoke>'
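Streamlit reruns app.py from top to bottom on every user interaction, so ordinary variables are reset each time while st.session_state persists for the session. The sketch below imitates that behavior with a plain dict standing in for st.session_state (an assumption for illustration; it does not use Streamlit), and `run_script` is a hypothetical name for one rerun.

```python
def run_script(session_state, new_response=None):
    """Simulate one top-to-bottom rerun of the script."""
    # Initialize the key only on the first run, as in the step above
    if 'model-response' not in session_state:
        session_state['model-response'] = '<provide query & click on invoke>'
    # A button callback would store the LLM output under the same key
    if new_response is not None:
        session_state['model-response'] = new_response
    return session_state['model-response']

state = {}                                   # stand-in for st.session_state
first = run_script(state)                    # first load: placeholder text
second = run_script(state, 'some answer')    # rerun after a model call
third = run_script(state)                    # later rerun: the answer is still there
```

The guard `if 'model-response' not in ...` is what keeps a later rerun from overwriting the stored response with the placeholder.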

6. Setup text area for model response

Streamlit API : st.text_area

Create the text box for showing the model response.

    # draw the box for model response
    st.text_area('Response', value=st.session_state['model-response'], height=400)

7. Setup text area for user query

    # draw the box for query
    query = st.text_area('Query', placeholder='provide query & invoke', value='who was the president of the USA in 2023?')

8. Setup model parameters as sliders in the sidebar

    # Temperature
    temperature = st.sidebar.slider(
        label='Temperature',
        min_value=0.01,
        max_value=1.0
    )

    # Top p
    top_p = st.sidebar.slider(
        label='Top p',
        min_value=0.01,
        max_value=1.0,
        value=0.01
    )

    # Top k
    top_k = st.sidebar.slider(
        label='Top k',
        min_value=1,
        max_value=50,
        value=10
    )

    # Repetition penalty
    repetition_penalty = st.sidebar.slider(
        label='Repetition penalty',
        min_value=0.0,
        max_value=5.0,
        value=1.0
    )

    # Maximum token
    max_tokens = st.sidebar.number_input(
        label='Max tokens',
        value=50
    )
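To make the sliders less abstract, here is a rough sketch of how temperature, top k and top p are commonly applied when picking the next token. It is a simplified illustration in plain Python over a made-up four-token distribution, not the code the HuggingFace endpoint runs; `filter_distribution` and the toy logits are hypothetical.

```python
import math

def filter_distribution(logits, temperature=1.0, top_k=10, top_p=1.0):
    """Return {token: probability} after temperature, top-k and top-p filtering."""
    # Temperature rescales the logits: lower values sharpen the distribution
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((w / total, tok) for tok, w in weights.items()), reverse=True)

    # Top k keeps only the k most likely tokens
    ranked = ranked[:top_k]

    # Top p keeps the smallest prefix whose cumulative probability reaches top_p
    kept, cumulative = [], 0.0
    for prob, tok in ranked:
        kept.append((prob, tok))
        cumulative += prob
        if cumulative >= top_p:
            break

    # Renormalize the surviving tokens so they sum to 1
    norm = sum(prob for prob, _ in kept)
    return {tok: prob / norm for prob, tok in kept}

toy_logits = {'Paris': 4.0, 'London': 2.0, 'Rome': 1.0, 'Oslo': 0.0}
narrow = filter_distribution(toy_logits, temperature=0.5, top_k=2)
wide = filter_distribution(toy_logits, temperature=1.0, top_k=4)
```

In this toy setup `narrow` keeps only the two most likely tokens and gives the top token a larger share than `wide` does, which is the intuition behind lowering the temperature and top k sliders for more predictable output.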

9. Utility functions

    # Function to create the LLM
    def get_llm(model_id):
        return HuggingFaceEndpoint(
            repo_id=model_id,
            temperature=temperature,
            top_k=top_k,
            top_p=top_p,
            repetition_penalty=repetition_penalty,
            max_new_tokens=max_tokens
        )

    # Function for invoking the LLM
    def invoke():
        llm_hf = get_llm(model_id)

        # Show spinner, while we are waiting for the response
        with st.spinner('Invoking LLM ... '):
            st.session_state['model-response'] = llm_hf.invoke(query)
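A call to a remote endpoint can fail (an invalid token, a model that is not available, a timeout). One possible refinement, sketched here under assumptions, is to catch the exception in the callback and show the message in the response box. `safe_invoke` and `FakeLLM` are hypothetical names; the fake class only stands in for an object with an `invoke(query)` method so the sketch runs without network access, and a plain dict stands in for st.session_state.

```python
class FakeLLM:
    """Stand-in for an LLM object; raises when constructed with fail=True."""
    def __init__(self, fail=False):
        self.fail = fail

    def invoke(self, query):
        if self.fail:
            raise RuntimeError('endpoint unavailable')
        return f'echo: {query}'

def safe_invoke(llm, query, session_state):
    """Store the model output, or a readable error, under 'model-response'."""
    try:
        session_state['model-response'] = llm.invoke(query)
    except Exception as err:
        # Keep the app running and surface the problem to the user
        session_state['model-response'] = f'Error: {err}'
    return session_state['model-response']

state = {}
ok = safe_invoke(FakeLLM(), 'hello', state)
failed = safe_invoke(FakeLLM(fail=True), 'hello', state)
```

In app.py the same try/except could wrap the `llm_hf.invoke(query)` line inside `invoke()`.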

10. Create a button for invoking the LLM

    st.button("Invoke", on_click=invoke)

You should now have a working playground!

Part-2 Deploy the application on HuggingFace spaces

1. Create the dependency file : requirements.txt

requirements.txt

    python-dotenv
    langchain
    langchain-community
    huggingface_hub
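Before uploading, it can help to confirm locally that every module app.py imports is installed. A small sketch using only the standard library follows; `missing_modules` is a hypothetical helper, and the module names listed are the ones imported in Part-1.

```python
import importlib.util

def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]

# Import names used by app.py (python-dotenv is imported as 'dotenv')
required = ['streamlit', 'dotenv', 'langchain_community']
print('Missing:', missing_modules(required))
```

An empty list means the imports resolve locally; any name printed points to a package that probably also needs an entry in requirements.txt.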

2. Log into HuggingFace portal

3. Create a HuggingFace space

- Provide a name for the space

- Select the SDK/Framework Streamlit

- Use default hardware

4. Create a Secret for holding HuggingFace API Key

- Click on Settings

- Scroll to Variables and secrets

- Click on Create new secret

- Copy/paste the name of the secret shown below and set its value to your API key (a read-only token is sufficient)

HUGGINGFACEHUB_API_TOKEN

5. Upload the two files to the space

Click on Files and then select the Add files option.

- app.py

- requirements.txt

Solution

The solution to the exercise is available in the project repository.