Exercise #2: Chatbot

Objective

Learn to build a multi-turn conversational interface (Chatbot).

- Use the Cohere command model

- Manage the conversation context in Streamlit session_state

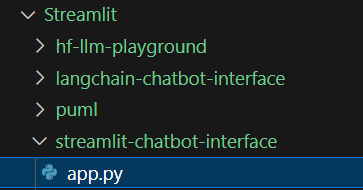

Start by creating a Python script file named app.py. If you would like to host it on a Hugging Face Space, create a requirements.txt file as well.

1. Import the libraries

    import streamlit as st
    import time
    import os
    from dataclasses import dataclass
    from dotenv import load_dotenv
    from langchain_community.llms import Cohere

Before proceeding, check out the Cohere API for LLM creation.

2. Set up the data structure for holding the messages

- Create a Python class with just the attributes.

- Define the variables to hold the roles

    # Define a Message class for holding the query/response
    @dataclass
    class Message:
        role: str     # identifies the actor (system, user or human, assistant or ai)
        payload: str  # instructions, query, response

    # Streamlit knows about the common roles; as a result, it is able to display the icons
    USER = "user"            # or human
    ASSISTANT = "assistant"  # or ai
    SYSTEM = "system"
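
To see what the @dataclass decorator provides, the following minimal sketch creates two messages outside Streamlit. The payload values are made up for illustration; the class and role constants mirror the ones defined above.

```python
from dataclasses import dataclass, asdict

@dataclass
class Message:
    role: str     # who is speaking
    payload: str  # what was said

USER = "user"
ASSISTANT = "assistant"

# Illustrative values only
question = Message(role=USER, payload="What is Streamlit?")
answer = Message(role=ASSISTANT, payload="A Python framework for data apps.")

# @dataclass generates __init__, __repr__ and __eq__ automatically
print(question)
print(question == Message(USER, "What is Streamlit?"))
print(asdict(answer))
```

Since equality is compared field by field, two messages with the same role and payload are treated as equal.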

3. Initialize the data structure to hold the context

- Each message will be converted to an instance of Message

- All message objects will be held in an array

- The Streamlit session object will hold the array under the key stored in the constant MESSAGES (the string 'messages')

- Initialize the array with an instruction (message), with role=system

    MESSAGES = 'messages'

    if MESSAGES not in st.session_state:
        system_message = Message(role=SYSTEM, payload="you are a polite assistant named 'Ruby'.")
        st.session_state[MESSAGES] = [system_message]
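
Streamlit re-runs the whole script on every interaction, which is why the initialization is guarded by the membership check. The sketch below imitates that behavior with a plain dict standing in for st.session_state (an assumption made so the example runs without Streamlit); run_script is a hypothetical helper that plays the role of one script run.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str
    payload: str

SYSTEM = "system"
USER = "user"
MESSAGES = "messages"

# Plain dict standing in for st.session_state (assumption for this sketch)
session_state = {}

def run_script(state, user_input=None):
    """Hypothetical stand-in for one top-to-bottom run of the Streamlit script."""
    # Executes only on the first run; later runs find the key and skip it
    if MESSAGES not in state:
        state[MESSAGES] = [Message(SYSTEM, "you are a polite assistant named 'Ruby'.")]
    if user_input:
        state[MESSAGES].append(Message(USER, user_input))
    return state[MESSAGES]

run_script(session_state)            # first run seeds the system message
run_script(session_state, "Hello")   # second run keeps it and adds the query
history = run_script(session_state)  # third run changes nothing
print(len(history))
print([m.role for m in history])
```

Without the guard, every run would reset the list and the conversation history would be lost.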

4. Set up the title & input text element for the Cohere API key

- API key for Cohere is needed

- User will need to provide this key

    # Set the title
    st.title("Multi-Turn conversation interface !!!")

    # Input text for getting the API key
    cohere_api_key = st.sidebar.text_input('Cohere API key', placeholder='copy & paste your API key')
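
During local development it can be convenient to fall back to an environment variable when the sidebar field is left empty. The following is only a sketch of that idea: resolve_api_key is a hypothetical helper, and the variable name COHERE_API_KEY is an assumption rather than something this exercise prescribes.

```python
import os

def resolve_api_key(typed_value, env=os.environ, var_name="COHERE_API_KEY"):
    """Prefer the key typed in the sidebar; otherwise fall back to the environment.

    var_name is an assumed variable name for this sketch.
    """
    typed_value = (typed_value or "").strip()
    if typed_value:
        return typed_value
    # Returns None when the variable is missing or empty
    return env.get(var_name) or None

# Illustrative values only; a dict stands in for os.environ
print(resolve_api_key("  abc123 ", env={}))
print(resolve_api_key("", env={"COHERE_API_KEY": "from-env"}))
print(resolve_api_key("", env={}))
```

In the app, the value returned by st.sidebar.text_input could be passed in as typed_value, and a None result could be used to skip the LLM call until a key is available.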

For local development, let's launch the interface. As the script is updated, the changes will be reflected in the browser.

- Open the terminal

- Change to the folder that has the script (streamlit-chat-context-in-session.py in this example; substitute your own file name, such as app.py, if it differs)

    streamlit run streamlit-chat-context-in-session.py

5. Define utility functions to invoke the LLM

    # Create an instance of the LLM.
    # The key is passed as an argument so that the cache is keyed on it;
    # otherwise an instance built with an earlier (possibly empty) key would be reused.
    @st.cache_resource
    def get_llm(api_key):
        return Cohere(model="command", cohere_api_key=api_key)

    # Create the context by concatenating the messages
    def get_chat_context():
        context = ''
        for msg in st.session_state[MESSAGES]:
            context = context + '\n\n' + msg.role + ':' + msg.payload
        return context

    # Generate the response and return
    def get_llm_response(prompt):
        llm = get_llm(cohere_api_key)
        # Show spinner, while we are waiting for the response
        with st.spinner('Invoking LLM ... '):
            # get the context
            chat_context = get_chat_context()
            # Prefix the query with context
            query_payload = chat_context + '\n\n Question: ' + prompt
            response = llm.invoke(query_payload)
        return response
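
The string that reaches the model can be inspected without calling Cohere. The sketch below applies the same concatenation to a plain list and hands the result to fake_llm, a hypothetical stub that only reports the size of the prompt it received; it is not part of LangChain or Cohere, and the messages are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str
    payload: str

def build_context(messages):
    """Join all messages as 'role:payload', each preceded by a blank line."""
    context = ''
    for msg in messages:
        context = context + '\n\n' + msg.role + ':' + msg.payload
    return context

def fake_llm(query_payload):
    """Hypothetical stub standing in for llm.invoke()."""
    return f"(stub) received {len(query_payload)} characters"

# Illustrative conversation history
messages = [
    Message("system", "you are a polite assistant named 'Ruby'."),
    Message("user", "What is your name?"),
    Message("assistant", "My name is Ruby."),
]

query_payload = build_context(messages) + '\n\n Question: ' + "Can you repeat that?"
print(query_payload)
print(fake_llm(query_payload))
```

Because the whole history is resent on every turn, the prompt grows with the length of the conversation.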

6. Write the messages to chat_message container

- Creates the chat message container with text elements

- Streamlit uses the chat_message(name) to paint an icon for the role

    for msg in st.session_state[MESSAGES]:
        st.chat_message(msg.role).write(msg.payload)

7. Create the chat_input element to get the user query

    # Interface for user input
    prompt = st.chat_input(placeholder='Your input here')

8. Process the query received from user

- Check if the prompt is not null (or empty) before processing

- Add the query to the messages array in the session

- Invoke the LLM

- Convert the received response to a message object

- Add the message object to the message array in the session state

- Append the response as the chat_message

    if prompt:
        # Create the user message and add it to the end of the messages in the session
        user_message = Message(role=USER, payload=prompt)
        st.session_state[MESSAGES].append(user_message)

        # Write the user prompt as a chat message
        st.chat_message(USER).write(prompt)

        # Invoke the LLM
        response = get_llm_response(prompt)

        # Create a message object representing the response
        assistant_message = Message(role=ASSISTANT, payload=response)

        # Add the response message to the messages array in the session
        st.session_state[MESSAGES].append(assistant_message)

        # Write the response as a chat message
        st.chat_message(ASSISTANT).write(response)
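
The steps above can be sketched as a single function that can be exercised without a browser. Here handle_turn and echo_llm are hypothetical names, a plain list replaces st.session_state[MESSAGES], and the display calls are left out.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str
    payload: str

USER = "user"
ASSISTANT = "assistant"

def echo_llm(prompt):
    """Hypothetical stub standing in for get_llm_response()."""
    return "You said: " + prompt

def handle_turn(messages, prompt, llm=echo_llm):
    """Process one user query; return the reply, or None if nothing was submitted."""
    if not prompt:  # None or '' means there is nothing to process
        return None
    messages.append(Message(USER, prompt))         # record the query
    response = llm(prompt)                         # invoke the (stub) LLM
    messages.append(Message(ASSISTANT, response))  # record the response
    return response

messages = []
handle_turn(messages, "")                 # ignored: empty input
reply = handle_turn(messages, "Hi Ruby")  # adds two messages
print(reply)
print([m.role for m in messages])
```

Keeping the turn logic in a function like this makes it possible to check the order of the stored messages separately from the UI.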

9. Write out the current content of the context

    # Separator
    st.divider()
    st.subheader('st.session_state[MESSAGES] dump:')

    # Print the state of the buffer
    for msg in st.session_state[MESSAGES]:
        st.text(msg.role + ' : ' + msg.payload)

Solution

The solution script is available in the project repository.