Exercise #3: LangChain memory

Objective

Learn to use the LangChain ConversationSummaryMemory class.

- Decide on the LLM used for chatbot responses

- Decide on the LLM used for summarizing the conversation history
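To make the two roles concrete, here is a toy sketch of what a summary-style memory does: after every turn, the previous summary and the latest exchange are condensed into a new summary, and only that summary is carried forward. `ToySummaryMemory` and `fake_summarizer` are hypothetical names used for illustration. This is not LangChain's implementation, and the stand-in summarizer simply truncates text where the real class would call the summarization LLM.

```python
from typing import Callable


class ToySummaryMemory:
    """Toy illustration of summary memory (not LangChain's implementation)."""

    def __init__(self, summarize: Callable[[str, str], str]) -> None:
        self.summarize = summarize  # stand-in for the summarization LLM
        self.buffer = ""            # running summary of the conversation

    def save_context(self, user_text: str, ai_text: str) -> None:
        # Fold the latest exchange into the running summary.
        new_lines = f"user: {user_text}\nassistant: {ai_text}"
        self.buffer = self.summarize(self.buffer, new_lines)


def fake_summarizer(summary: str, new_lines: str, max_chars: int = 80) -> str:
    # Hypothetical summarizer: joins the old summary and the new lines, then
    # keeps only the last max_chars characters. A real one would prompt an LLM.
    combined = (summary + "\n" + new_lines).strip()
    return combined[-max_chars:]


memory = ToySummaryMemory(fake_summarizer)
memory.save_context("Hi, I am Sam.", "Hello Sam!")
memory.save_context("What is LangChain?", "A framework for LLM apps.")
print(memory.buffer)
```

The point of the design is that the context passed to the chatbot LLM stays bounded, at the cost of one extra summarization call per turn.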

Check out the final bot on the Hugging Face Space: acloudfan/chatbot-langchain-memory

Hints

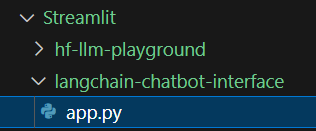

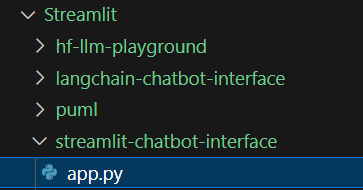

1. Make a copy of the Streamlit chatbot

- Create a folder (langchain-chatbot-interface)

- Copy Streamlt/streamlit-chatbot-interface/app.py to the new folder

- Make changes to app.py to accomplish the task

2. Memory class instance

- Check out the API documentation for ConversationSummaryMemory

- Instead of keeping the chat history directly in Streamlit session_state, you will use the ConversationSummaryMemory class

- Create a function to get the summarization LLM

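    # Import path may differ by LangChain version
    from langchain_openai import ChatOpenAI

    def get_summarization_llm():
        # openai_api_key is expected to be defined earlier in app.py
        model = 'gpt-3.5-turbo-0125'
        return ChatOpenAI(model=model, openai_api_key=openai_api_key)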

- Create an instance of the ConversationSummaryMemory class and add it to the Streamlit session state.

    from langchain.memory import ConversationSummaryMemory

    # Create the memory only once per session
    if 'MEMORY' not in st.session_state:
        memory = ConversationSummaryMemory(
            llm=get_summarization_llm(),
            human_prefix='user',
            ai_prefix='assistant',
            return_messages=True
        )
        # add to the session
        st.session_state['MEMORY'] = memory
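The guard matters because Streamlit reruns the whole script on every user interaction, while `st.session_state` persists across reruns within a session. The sketch below imitates that behavior with a plain dict standing in for `st.session_state`. `run_script` and `make_memory` are hypothetical names, and the dict-based memory is only a placeholder for the `ConversationSummaryMemory` instance.

```python
session_state: dict = {}   # stand-in for st.session_state
created = []               # records each time a memory object is built


def make_memory() -> dict:
    # Placeholder for constructing ConversationSummaryMemory(...)
    memory = {"buffer": ""}
    created.append(memory)
    return memory


def run_script(user_input: str) -> None:
    # One simulated rerun of app.py
    if "MEMORY" not in session_state:
        session_state["MEMORY"] = make_memory()
    memory = session_state["MEMORY"]
    memory["buffer"] += f"user: {user_input}\n"


# Three interactions trigger three reruns, but the memory is built only once.
for text in ["hello", "how are you?", "bye"]:
    run_script(text)

print(len(created))
print(session_state["MEMORY"]["buffer"])
```

Without the `if` check, each rerun would replace the memory with a fresh, empty one and the summary would be lost.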

3. Create the ConversationChain

- Check out the API documentation for ConversationChain

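    from langchain.chains import ConversationChain

    def get_llm_chain():
        # Reuse the memory stored in the session so the context carries over
        memory = st.session_state['MEMORY']
        conversation = ConversationChain(
            llm=get_llm(),
            memory=memory
        )
        return conversation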
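A chain built this way can be called once per user prompt. The helper below is a minimal sketch, assuming the chain is a `ConversationChain` that keeps its default keys (`input` for the prompt, `response` for the reply). `ask` and `EchoChain` are hypothetical names, and `EchoChain` only stands in for the real chain so the snippet runs without an API key.

```python
from typing import Any


def ask(chain: Any, prompt: str) -> str:
    # Assumes the ConversationChain defaults: input key "input",
    # output key "response".
    result = chain.invoke({"input": prompt})
    return result["response"]


class EchoChain:
    """Hypothetical stand-in that mimics the shape of the chain's result."""

    def invoke(self, inputs: dict) -> dict:
        return {**inputs, "response": f"You said: {inputs['input']}"}


print(ask(EchoChain(), "Hello"))
```

In app.py the call would look like `ask(get_llm_chain(), prompt)`. The memory attached to the chain is updated as part of the call, so no separate bookkeeping of the chat history should be needed.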

4. Dump the summarized conversation history

- Show the conversation history on the interface

    st.divider()
    st.subheader('Context/Summary:')
    # Print the state of the buffer
    # (a bare expression is rendered by Streamlit's magic commands)
    st.session_state['MEMORY'].buffer
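Before the first exchange the summary is an empty string, so this section shows nothing useful. A small helper like the one below could supply placeholder text instead. `summary_text` is a hypothetical name, and `SimpleNamespace` only stands in for the memory object, which is assumed to expose the summary as a string in `buffer`.

```python
from types import SimpleNamespace


def summary_text(memory, placeholder: str = "(no summary yet)") -> str:
    # Return the running summary, or a placeholder while it is still empty.
    summary = getattr(memory, "buffer", "")
    return summary if summary else placeholder


empty = SimpleNamespace(buffer="")
filled = SimpleNamespace(buffer="The user introduced themselves as Sam.")
print(summary_text(empty))
print(summary_text(filled))
```

In the app this could be displayed with `st.write(summary_text(st.session_state['MEMORY']))`.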

5. To stop Streamlit from processing the rest of the script, call st.stop()

Solution

The solution uses the OpenAI gpt-3.5-turbo model for both the chatbot responses and the context summarization. You may replace either with a model of your choice. The solution script is available in the project repository.