Exercise#1 Retrieval History

Objective

Use the LangChain create_history_aware_retriever to address the issue related to loss of context in query to retrieve the context. You are already given the boiler plate code for setting up the vector database and LLM. Your task is to implement the history aware retriever.

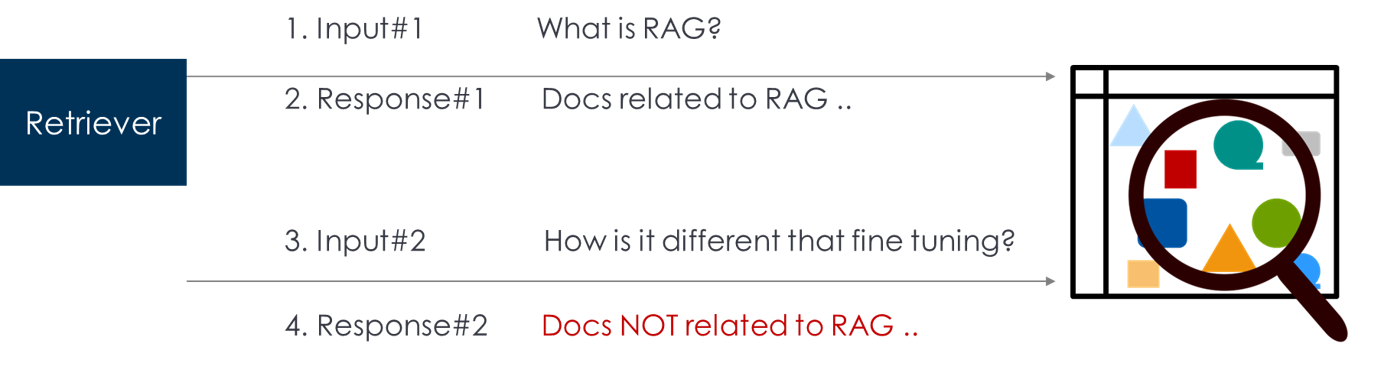

Issue

In multi-turn conversation chatbots, input is passed to a retriever to fetch the context. Sometimes the subsequent input may not have the context for retrieval of appropriate documents. This leads to a loss of context that has a direct impact on the quality of responses.

The scenario is depicted in the illustration below. In the second input, the retriver does not have access to the earlier input as a result the word “it” gets mis-interpretted.

Setup LLM & Vector database

Create a new notebook and copy/paste the following code.

Location and name of the notebook: gen-ai-app-dev-template/RAG/conversational-RAG-history.ipynb

1 Setup LLM

Use the utility functions to create the LLM. Copy paste the facts below.

You MUST adjust the (1) path to the API key file (2) path for the util file depending on the location of notebook.

from dotenv import load_dotenv

import sys

import json

from langchain.prompts import PromptTemplate

# Load the file that contains the API keys - OPENAI_API_KEY

load_dotenv('C:\\Users\\raj\\.jupyter\\.env')

# setting path

sys.path.append('../')

from utils.create_chat_llm import create_gpt_chat_llm, create_cohere_chat_llm

# Try with GPT

llm = create_gpt_chat_llm()

2. Setup Vector database (ChromaDB)

# 1. Load a couple of Blogs

from langchain_community.document_loaders import WebBaseLoader

# Sample blogs on RAG that we will add to vector database

url1 = "https://cloud.google.com/blog/products/ai-machine-learning/to-tune-or-not-to-tune-a-guide-to-leveraging-your-data-with-llms"

url2 = "https://aws.amazon.com/blogs/aws/build-rag-and-agent-based-generative-ai-applications-with-new-amazon-titan-text-premier-model-available-in-amazon-bedrock/"

loader = WebBaseLoader(

web_paths=(url1,url2)

)

docs = loader.load()

# 2. Chunk the blogs

from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=300, chunk_overlap=20)

chunked_documents = text_splitter.split_documents(docs)

# 3. Add chunks to the ChromaDB

from langchain_community.vectorstores import Chroma

# from langchain_chroma import Chroma

from langchain_community.embeddings.sentence_transformer import (

SentenceTransformerEmbeddings,

)

# load it into Chroma using default embedding all-MiniLM-L6-v2

collection_name = 'sample-blog'

collection_metadata = {'embedding': 'all-MiniLM-L6-v2'}

embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

vector_store = Chroma(collection_name=collection_name, collection_metadata=collection_metadata, embedding_function=embedding_function)

vector_store.add_documents(chunked_documents)

retriever = vector_store.as_retriever()

Task

-

Read through the documentation for the LangChain conveneince function create_history_aware_retriver

-

Use the create_history_aware_retriver function to create a RAG chain. Use the prompts below:

from langchain_core.prompts import ChatPromptTemplate

from langchain.chains.combine_documents import create_stuff_documents_chain

from langchain.chains import create_retrieval_chain

from langchain.chains import create_history_aware_retriever

from langchain_core.prompts import MessagesPlaceholder

# This is the prompt used for generating the input/query from chat history and user input

contextualize_q_system_prompt = (

"Given a chat history and the latest user question "

"which might reference context in the chat history, "

"formulate a standalone question which can be understood "

"without the chat history. Do NOT answer the question, "

"just reformulate it if needed and otherwise return it as is."

)

contextualize_q_prompt = ChatPromptTemplate.from_messages(

[

("system", contextualize_q_system_prompt),

MessagesPlaceholder("chat_history"),

("human", "{input}"),

]

)

- Test your code to verify if issue is resolved

Solution

The solution to the exercise is available in the following notebook.

Open in Google Colab

- You need to install the packages listed in the notebook