Exercise#1 Single-step agent

Objective

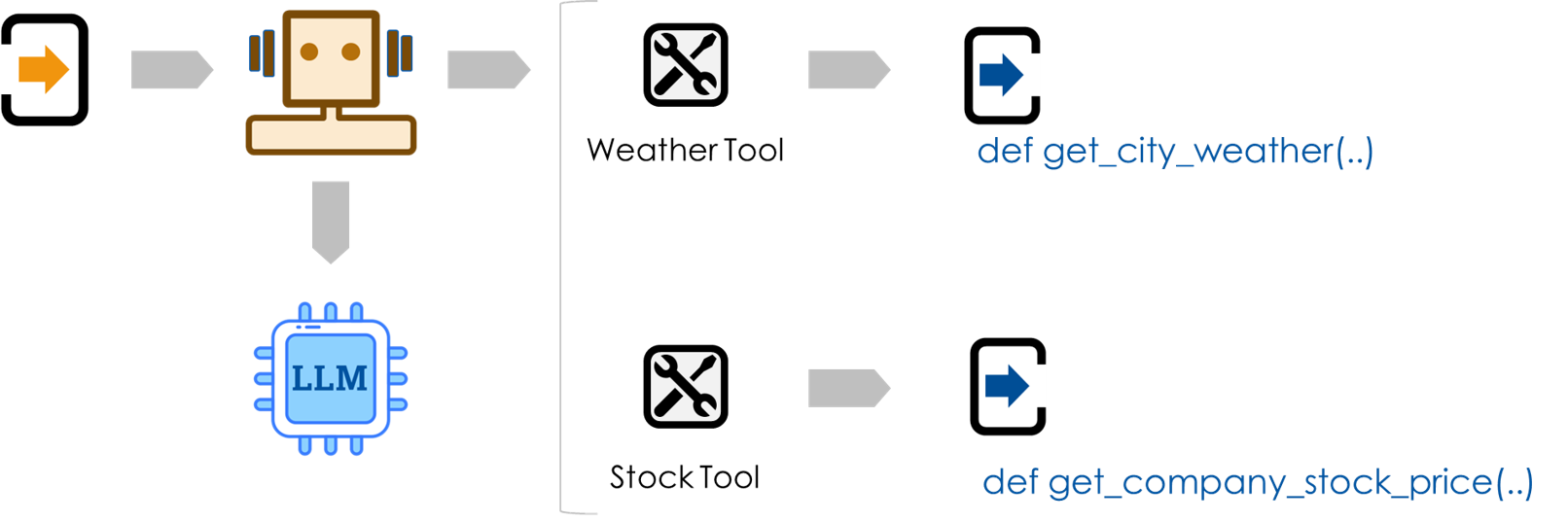

Learn how agents use LLMs and tools to carry out a given task or solve a problem.

The code below uses the GPT-3.5-turbo model. If a run gets out of control, you may end up paying for unnecessary LLM calls.

The code deliberately avoids the LangChain Tool and Agent classes, because the intent is to show you the inner workings of an agent.

Dummy functions

Local functions serve as the tools. For testing, you will use two local functions that return hardcoded information.

- Weather tool : returns the weather for the given city

- Stock tool : returns the last known stock price for the given company

Agent logic

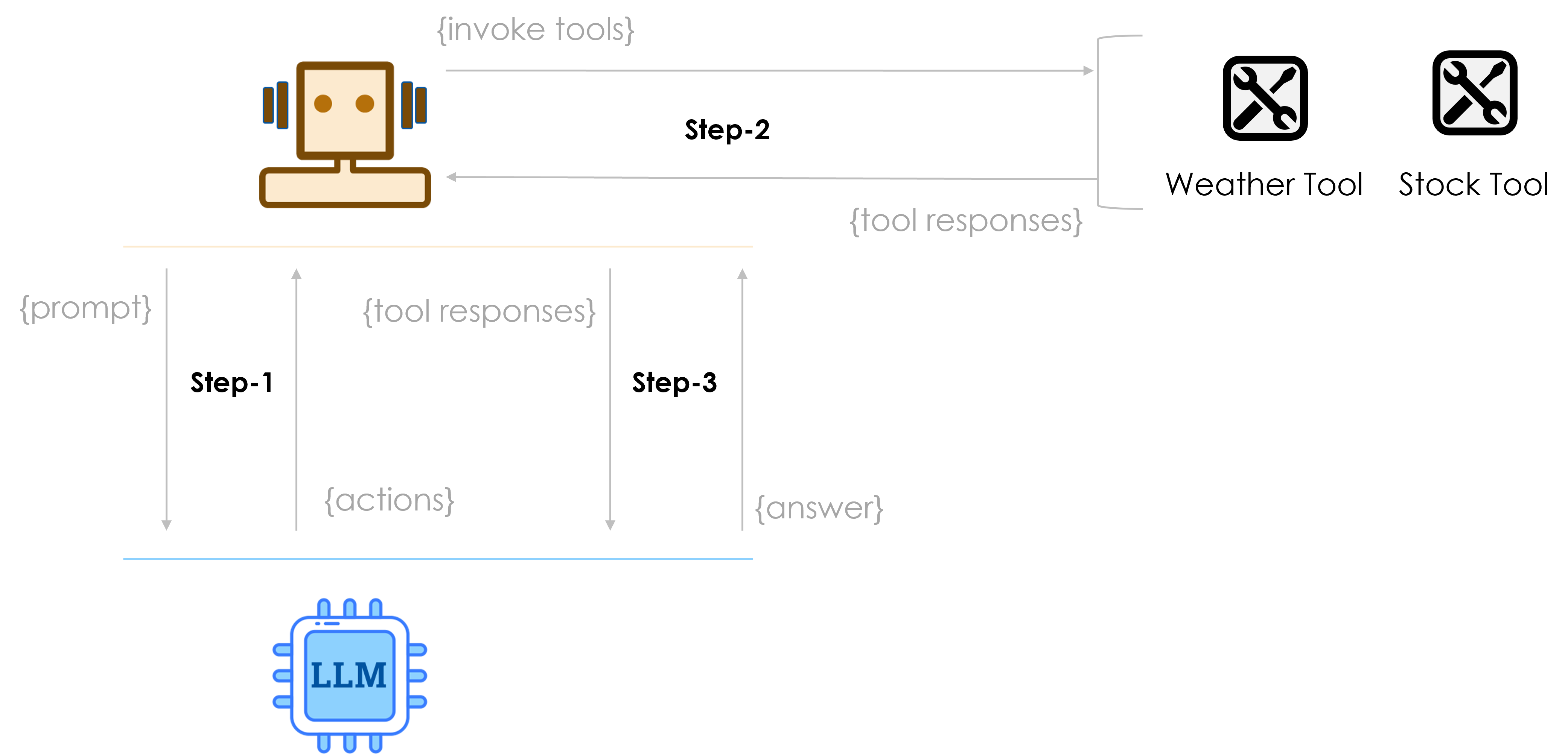

The agent is implemented to run three steps:

Step-1 Generate plan with actions

Step-2 Invoke tools

Step-3 Send response to LLM
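The three steps above can be sketched offline, without any paid LLM call. This is a minimal illustration, not the exercise's implementation: `scripted_llm`, `TOOLS`, and `run_single_step_agent` are hypothetical names, and the scripted function only imitates the JSON an LLM is expected to return.

```python
import json

def city_weather(city: str) -> dict:
    # Hardcoded data, mirroring the dummy-tool idea used in this exercise
    data = {"paris": 73, "london": 82}
    return {"temperature": data.get(city.lower(), "unknown")}

TOOLS = {"city_weather": city_weather}

def scripted_llm(question: str, tool_responses: list) -> str:
    """Hypothetical stand-in for an LLM call; returns JSON text."""
    if not tool_responses:
        # No tool output yet, so reply with a plan of actions
        plan = {"actions": [
            {"action": "city_weather", "arguments": {"city": "Paris"}},
            {"action": "city_weather", "arguments": {"city": "London"}},
        ]}
        return json.dumps(plan)
    # Tool output is available, so reply with an answer
    hottest = max(tool_responses, key=lambda r: r["response"]["temperature"])
    return json.dumps({"answer": hottest["arguments"]["city"]})

def run_single_step_agent(question: str) -> str:
    # Step-1: generate a plan with actions
    plan = json.loads(scripted_llm(question, []))
    if "answer" in plan:
        return plan["answer"]
    # Step-2: invoke each requested tool
    results = []
    for action in plan["actions"]:
        output = TOOLS[action["action"]](**action["arguments"])
        results.append({**action, "response": output})
    # Step-3: send the tool responses back for the final answer
    final = json.loads(scripted_llm(question, results))
    return final["answer"]

print(run_single_step_agent("Which city is hotter, Paris or London?"))
```

Swapping `scripted_llm` for a real model call is the only change needed to turn this sketch into a live agent, which is why the exercise keeps the LLM behind a single `invoke` call.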

Steps

Setup LLM

Note: You MUST change the location of the API key file to match your setup.

from dotenv import load_dotenv

import sys

import json

from langchain.prompts import PromptTemplate

# Load the file that contains the API keys - OPENAI_API_KEY

load_dotenv('C:\\Users\\raj\\.jupyter\\.env')

# setting path

sys.path.append('../')

from utils.create_chat_llm import create_gpt_chat_llm, create_cohere_chat_llm, create_anthropic_chat_llm, create_hugging_face_chat_llm

# Try with GPT

llm = create_gpt_chat_llm({"temperature":0.1})

1. Set up the prompt

- Review the prompt below to see how the LLM learns about the available tools

# Template

template = """

You are a helpful assistant capable of answering questions on various topics.

You must not use your internal knowledge or information to answer questions.

Instructions:

Think step-by-step to create a plan.

Use only the following available tools to find information.

Tools Available:

{tools}

Guidelines for Responses:

Format 1: If the question cannot be answered with the available tools, use this format:

{{"answer": "No appropriate tool available"}}

Format 2: If you need to run tools to obtain the information, use this format:

{{"actions": [{{"action": tool name, "arguments": dictionary of argument values}}]}}

Format 3: If you can answer the question using the responses from the tools, use this format:

{{"answer": "your response to the question", "explanation": "provide your explanation here"}}

Avoid any preamble; respond directly using one of the specified JSON formats.

Question:

{question}

Tool Responses:

{tool_responses}

Your Response:

"""

prompt = PromptTemplate(
    template=template,
    input_variables=['tools', 'question', 'tool_responses']
)
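The doubled braces in the template are escapes: a literal `{` or `}` in the rendered prompt must be written as `{{` or `}}`, while single braces mark variables. The sketch below shows the rule with plain `str.format`, on the assumption that the default f-string style of `PromptTemplate` follows the same escaping. The template text here is a shortened, hypothetical example.

```python
# A shortened, hypothetical template: doubled braces are literal JSON braces,
# single braces mark the variable to substitute.
template = 'Reply as {{"answer": "..."}} to the question: {question}'

rendered = template.format(question="Is it sunny in Paris?")
print(rendered)
```

Because every brace has to be balanced after rendering, it helps to print the rendered prompt once and check that each JSON format shown to the LLM is valid.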

2. Set up the tools

- Create dummy tool functions for stock price & city weather

- Functions are hardcoded to respond for a fixed set of cities/stocks

- Set up the tool map: a dictionary of functions with the function name as the key

## Tool 1 for stocks
def company_stock_price(stock_symbol: str) -> dict:
    # Hardcoded prices; any other symbol returns "unknown"
    if stock_symbol.upper() == 'AAPL':
        return {"price": 192.32}
    elif stock_symbol.upper() == 'MSFT':
        return {"price": 415.60}
    elif stock_symbol.upper() == 'AMZN':
        return {"price": 183.60}
    else:
        return {"price": "unknown"}

# Description of the stock tool that is passed to the LLM in the prompt
stock_tool_description = {
    "name": "company_stock_price",
    "description": "This tool returns the last known stock price for a company based on its ticker symbol",
    "arguments": [
        {"stock_symbol": "stock ticker symbol for the company"}
    ],
    "response": "last known stock price"
}

## Tool 2 for city weather
def city_weather(city: str) -> dict:
    # Hardcoded weather; any other city returns "unknown"
    if city.lower() == "new york":
        return {"temperature": 68, "forecast": "rain"}
    elif city.lower() == "paris":
        return {"temperature": 73, "forecast": "sunny"}
    elif city.lower() == "london":
        return {"temperature": 82, "forecast": "cloudy"}
    else:
        return {"temperature": "unknown"}

# Description of the weather tool that is passed to the LLM in the prompt
city_weather_tool_description = {
    "name": "city_weather",
    "description": "This tool returns the current temperature and forecast for the given city",
    "arguments": [
        {"city": "name of the city"}
    ],
    "response": "current temperature & forecast"
}

# Maintain the tools in a map for invocation by the agent
tools = [stock_tool_description, city_weather_tool_description]

tools_map = {
    'company_stock_price': company_stock_price,
    'city_weather': city_weather
}
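When the `tools` list is passed to the prompt as-is, string formatting should insert its Python representation, which uses single quotes. The sketch below compares that with `json.dumps`, an optional alternative that yields valid JSON matching the response formats the prompt asks for. The list here is a trimmed copy for illustration only.

```python
import json

# Trimmed copy of one tool description, for illustration only
tools = [
    {
        "name": "city_weather",
        "description": "Returns the current temperature and forecast for the given city",
        "arguments": [{"city": "name of the city"}],
        "response": "current temperature & forecast",
    }
]

as_repr = str(tools)                    # Python repr, single-quoted keys
as_json = json.dumps(tools, indent=2)   # valid JSON, double-quoted keys

print(as_repr)
print(as_json)
```

Either form is readable by the model; the JSON form is simply easier to inspect and to parse back when debugging prompts.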

3. Agent code

- Create a function for invoking the agent

def invoke_agent(question):
    # Set up the prompt. No tool has been invoked yet, so tool_responses is blank
    query = prompt.format(tools=tools, question=question, tool_responses="")

    # STEP-1 Invoke LLM for a plan i.e., tools to execute
    # ===================================================
    # The response lists the tools that the LLM wants executed
    response = llm.invoke(query)

    # Convert response to JSON object. The response is of type AIMessage
    response_json = json.loads(response.content)

    # Print the response
    print("STEP-1:", response_json, "\n")

    # STEP-2 Invoke the tool(s) suggested by LLM
    # ===========================================
    # The LLM may respond with an answer straight away, which happens
    # when it determines that no tool is available for the question
    action_responses = []
    if "answer" in response_json:
        # If the answer is already there
        return {"answer": response_json["answer"]}
    elif "actions" in response_json:
        # If the LLM has suggested tools to be executed, execute the tools
        action_responses = invoke_tools(response_json)

    # Print the tool responses
    print("STEP-2", " Agent tool invocation responses :", action_responses, "\n")

    # STEP-3 Invoke LLM to generate final response
    # =============================================
    # Now send the action responses to LLM for generating the answer
    query = prompt.format(tools=tools, question=question, tool_responses=action_responses)
    response = llm.invoke(query)

    # Convert response to JSON object
    response_json = json.loads(response.content)

    # Print the response
    print("STEP-3:", response_json, "\n")

    # Extract the answer from the response
    if "answer" in response_json:
        return response_json["answer"]
    else:
        return "Can't generate as there is no response from the tool!!!"
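The agent passes the LLM reply straight to `json.loads`, which raises an exception if the model adds any text around the JSON despite the "avoid any preamble" instruction. A more tolerant parser could look like the sketch below. `parse_llm_json` is a hypothetical helper, not part of the exercise, and it assumes the reply contains exactly one JSON object.

```python
import json

def parse_llm_json(text: str) -> dict:
    """Hypothetical helper: parse a reply that may wrap one JSON object in extra text."""
    try:
        # Fast path: the reply is already clean JSON
        return json.loads(text)
    except json.JSONDecodeError:
        pass
    # Fallback: keep only the span from the first "{" to the last "}"
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in the LLM reply")
    return json.loads(text[start:end + 1])

print(parse_llm_json('Here is the plan: {"answer": "ok"} Hope it helps.'))
```

The fallback is deliberately simple: a reply with two separate JSON objects would still fail, which is preferable to silently picking one of them.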

- Create a utility function for invoking the tools

# Utility function to process the actions received from the LLM
# Responses from the tools are expected to be in JSON format
def invoke_tools(response):
    action_responses = []
    if len(response["actions"]) == 0:
        print('question cannot be answered as there is no tool to use !!!')
    else:
        for action in response["actions"]:
            # Get the function pointer from the map
            action_function = tools_map[action["action"]]
            # Invoke the tool/function with the arguments as suggested by the LLM
            action_invoke_result = action_function(**action["arguments"])
            # Add the response to the action attribute
            action["response"] = action_invoke_result
            action_responses.append(action)
    # Return the responses (an empty list when no action was requested)
    return action_responses
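The tool lookup indexes `tools_map` directly, so a tool name that the LLM invents would raise a `KeyError`, and wrong argument names would raise a `TypeError`. One possible guard, sketched below under the assumption that errors should be reported back as data rather than stop the agent, is a small wrapper. `safe_invoke` is a hypothetical name, and the one-tool map is a stand-in for the exercise's `tools_map`.

```python
def city_weather(city: str) -> dict:
    # Minimal stand-in for the exercise's dummy weather tool
    return {"temperature": 73} if city.lower() == "paris" else {"temperature": "unknown"}

tools_map = {"city_weather": city_weather}

def safe_invoke(action: dict) -> dict:
    """Hypothetical wrapper: run one action and report failures as data."""
    name = action.get("action")
    tool = tools_map.get(name)
    if tool is None:
        # The LLM asked for a tool that was never registered
        return {**action, "response": {"error": f"unknown tool: {name}"}}
    try:
        result = tool(**action.get("arguments", {}))
    except TypeError as exc:
        # Typically raised for missing or unexpected argument names
        result = {"error": str(exc)}
    return {**action, "response": result}

print(safe_invoke({"action": "city_weather", "arguments": {"city": "Paris"}}))
print(safe_invoke({"action": "web_search", "arguments": {"query": "llm"}}))
```

Returning the error inside the `response` field keeps the shape of each entry unchanged, so the LLM can see in the final step which tool call failed.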

Test scenario#1

- Simple test that uses 1 tool

question = "Which of these cities is hotter, Paris or London"

# question = "I am visiting Paris, should I carry an umbrella?"

response = invoke_agent(question)

print("Final response::",response)

Response:

STEP-1: {'actions': [{'action': 'city_weather', 'arguments': {'city': 'Paris'}}, {'action': 'city_weather', 'arguments': {'city': 'London'}}]}

STEP-2 Agent tool invocation responses : [{'action': 'city_weather', 'arguments': {'city': 'Paris'}, 'response': {'temperature': 73, 'forecast': 'sunny'}}, {'action': 'city_weather', 'arguments': {'city': 'London'}, 'response': {'temperature': 82, 'forecast': 'cloudy'}}]

STEP-3: {'actions': [{'action': 'city_weather', 'arguments': {'city': 'Paris'}, 'response': {'temperature': 73, 'forecast': 'sunny'}}, {'action': 'city_weather', 'arguments': {'city': 'London'}, 'response': {'temperature': 82, 'forecast': 'cloudy'}}]}

Final response:: London is hotter than Paris

Test scenario#2

- Simple test to understand the behavior when no appropriate tool is available

question = "search the web for articles on 'large language models'"

response = invoke_agent(question)

print("Final response::",response)

Response:

STEP-1: {'answer': 'No appropriate tool available'}

Final response:: {'answer': 'No appropriate tool available'}

Test scenario#3

- A complex scenario requiring use of more than one tool

question = """

I am interested in investing in one of these stocks: AAPL, MSFT, or AMZN.

Decision Criteria:

Sunny Weather: Choose the stock with the lowest price.

Raining Weather: Choose the stock with the highest price.

Cloudy Weather: Do not buy any stock.

Location:

I am currently in New York.

Question:

Based on the current weather in New York and the stock prices, which stock should I invest in?

"""

response = invoke_agent(question)

print("Final response:",response)

Response:

STEP-1: {'actions': [{'action': 'city_weather', 'arguments': {'city': 'New York'}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AAPL'}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'MSFT'}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AMZN'}}]}

STEP-2 Agent tool invocation responses : [{'action': 'city_weather', 'arguments': {'city': 'New York'}, 'response': {'temperature': 68, 'forecast': 'rain'}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AAPL'}, 'response': {'price': 192.32}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'MSFT'}, 'response': {'price': 415.6}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AMZN'}, 'response': {'price': 183.6}}]

STEP-3: {'actions': [{'action': 'city_weather', 'arguments': {'city': 'New York'}, 'response': {'temperature': 68, 'forecast': 'rain'}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AAPL'}, 'response': {'price': 192.32}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'MSFT'}, 'response': {'price': 415.6}}, {'action': 'company_stock_price', 'arguments': {'stock_symbol': 'AMZN'}, 'response': {'price': 183.6}}]}

Final response: AAPL

Test scenario#4

- Test for a scenario where the response from the tool is insufficient to generate an answer

question = "I have only $200, can i buy GOOG stock?"

response = invoke_agent(question)

print("Final response:",response)

Response:

STEP-1: {'actions': [{'action': 'company_stock_price', 'arguments': {'stock_symbol': 'GOOG'}}]}

STEP-2 Agent tool invocation responses : [{'action': 'company_stock_price', 'arguments': {'stock_symbol': 'GOOG'}, 'response': {'price': 'unknown'}}]

STEP-3: {'actions': [{'action': 'company_stock_price', 'arguments': {'stock_symbol': 'GOOG'}, 'response': {'price': 'unknown'}}]}

Final response: Can't generate as there is no response from the tool!!!
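The fallback message above does not say which tool call came back empty. As a possible refinement, the agent could inspect the tool responses for the `"unknown"` marker that the dummy tools return. The sketch below assumes that marker convention; `unknown_results` is a hypothetical helper, not part of the exercise.

```python
def unknown_results(action_responses: list) -> list:
    """Hypothetical helper: list actions whose tool response contains 'unknown'."""
    return [
        item for item in action_responses
        if "unknown" in item.get("response", {}).values()
    ]

# Sample tool responses in the same shape the agent prints in STEP-2
responses = [
    {"action": "company_stock_price", "arguments": {"stock_symbol": "GOOG"},
     "response": {"price": "unknown"}},
    {"action": "company_stock_price", "arguments": {"stock_symbol": "AAPL"},
     "response": {"price": 192.32}},
]

missing = unknown_results(responses)
print([m["arguments"] for m in missing])
```

With such a check, the agent could name the symbol or city it has no data for instead of returning a generic failure.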

Solution

The solution to the exercise is available in the following notebook.