Exercise#4 Create agent functions

Objective

Learn to build agentic RAG solutions with the LangChain agent creation functions and the AgentExecutor class. This exercise has two parts, each using a different create function:

- Part-1: Shows how to use create_structured_chat_agent

- Part-2: Shows how to use create_react_agent

Common steps for the 2 parts

Import packages

from langchain.agents import AgentExecutor, create_tool_calling_agent, create_structured_chat_agent, create_react_agent

from langchain_community.tools.tavily_search import TavilySearchResults

from langchain_core.prompts import ChatPromptTemplate, PromptTemplate, MessagesPlaceholder

from langchain_community.agent_toolkits.load_tools import load_tools

from langchain_core.tools import tool

import warnings

warnings.filterwarnings('ignore')

Set up the LLM

- You need to use a chat model for this exercise

- The model you use MUST support stop sequences; otherwise AgentExecutor raises a runtime error
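To see why the stop sequence matters: a ReAct-style agent asks the model to stop generating just before it would write its own "Observation" line, so that the real tool output can be inserted instead. The sketch below imitates that truncation in plain Python. `apply_stop` is a hypothetical helper (not a LangChain function), the stop string "\nObservation" is assumed for illustration, and the sample text is made up.

```python
def apply_stop(text: str, stop: list[str]) -> str:
    """Cut text at the earliest occurrence of any stop sequence (hypothetical helper)."""
    cut = len(text)
    for sequence in stop:
        index = text.find(sequence)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


# Made-up model output that runs past the point where the tool should be called
raw = (
    "Thought: I need the weather in Paris\n"
    "Action: city_weather\n"
    "Action Input: Paris\n"
    "Observation: sunny (invented by the model, not by the tool)\n"
)

truncated = apply_stop(raw, ["\nObservation"])
print(truncated)
```

Without the cut, the model's invented observation would be treated as if it were a real tool result.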

from dotenv import load_dotenv

import sys

import json

from langchain.prompts import PromptTemplate

# Load the file that contains the API keys - OPENAI_API_KEY

load_dotenv('C:\\Users\\raj\\.jupyter\\.env')

# setting path

sys.path.append('../')

from utils.create_chat_llm import create_gpt_chat_llm, create_cohere_chat_llm, create_anthropic_chat_llm, create_hugging_face_chat_llm

from utils.create_llm import create_cohere_llm, create_gpt_llm

# Try with GPT

llm = create_gpt_chat_llm({"temperature":0.1}) #, "model_kwargs":{"top_p":0.1}})

### Try out ONLY if you have tested Amazon Bedrock connectivity

### Model must support "Stop Sequence" e.g., [model_id='meta.llama3-8b-instruct-v1:0'] does not support "Stop sequence"

### Uncomment following lines of code to try out Anthropic Claude Haiku on Bedrock

# from utils.amazon_bedrock import create_bedrock_chat_model

# model_id='anthropic.claude-3-haiku-20240307-v1:0'

# llm = create_bedrock_chat_model(model_id)

Part-1

You will use the code & instructions to build a structured chat agent

- Use the dummy functions used in the earlier exercise (Scratch build single step agent)

- Refer to documentation for create_structured_chat_agent

1. Create the dummy functions

@tool

def company_stock_price(stock_symbol: str) -> dict:

    """

    Retrieve the current stock price for a given company stock symbol.

    This function accepts a stock symbol and returns the current stock price for the specified company.

    It supports stock symbols for the following companies:

    - Apple Inc. ("AAPL")

    - Microsoft Corporation ("MSFT")

    - Amazon.com, Inc. ("AMZN")

    If the stock symbol does not match any of the supported companies, the function returns "unknown" as the price.

    Args:

        stock_symbol (str): The stock symbol of the company whose stock price is requested.

    Returns:

        dict: A dictionary containing the stock price with the key "price".

        If the stock symbol is not recognized, the value is "unknown".

    """

    if stock_symbol.upper()=='AAPL':

        return {"price": 192.32}

    elif stock_symbol.upper()=='MSFT':

        return {"price": 415.60}

    elif stock_symbol.upper()=='AMZN':

        return {"price": 183.60}

    else:

        return {"price": "unknown"}

@tool

def city_weather(city: str) -> dict:

    """

    Retrieve the current weather information for a given city.

    This function accepts a city name and returns a dictionary containing the current temperature and weather forecast for that city.

    It supports the following cities:

    - New York

    - Paris

    - London

    If the city is not recognized, the function returns "unknown" for the temperature.

    Args:

        city (str): The name of the city for which the weather information is requested.

    Returns:

        dict: A dictionary with the following keys:

        - "temperature": The current temperature in Fahrenheit.

        - "forecast": A brief description of the current weather forecast.

        If the city is not recognized, the "temperature" key will have a value of "unknown".

    """

    if city.lower() == "new york":

        return {"temperature": 68, "forecast": "rain"}

    elif city.lower() == "paris":

        return {"temperature": 73, "forecast": "sunny"}

    elif city.lower() == "london":

        return {"temperature": 82, "forecast": "cloudy"}

    else:

        return {"temperature": "unknown"}

# create the tools array

tools = [company_stock_price, city_weather]

2. Set up the prompt

system = '''Respond to the human as helpfully and accurately as possible. You have access to the following tools:

{tools}

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or {tool_names}

Provide only ONE action per $JSON_BLOB, as shown:

```

{{

"action": $TOOL_NAME,

"action_input": $INPUT

}}

```

Follow this format:

Question: input question to answer

Thought: consider previous and subsequent steps

Action:

```

$JSON_BLOB

```

Observation: action result

... (repeat Thought/Action/Observation N times)

Thought: I know what to respond

Action:

```

{{

"action": "Final Answer",

"action_input": "Final response to human"

}}

```

Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation'''

human = '''{input}

{agent_scratchpad}

(reminder to respond in a JSON blob no matter what)'''

prompt = ChatPromptTemplate.from_messages(

[

("system", system),

MessagesPlaceholder("chat_history", optional=True),

("human", human),

]

)
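The prompt above asks the model to answer with a single JSON blob holding an "action" and an "action_input". As a rough illustration of what happens to such a reply, the sketch below pulls the blob out of a made-up model response using only the standard library. `parse_json_action` is a hypothetical helper, not LangChain's own parser, and it assumes the blob is wrapped in three backticks as the prompt requests.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built this way so the example stays readable


def parse_json_action(text: str) -> dict:
    """Extract the first fenced JSON blob from a model reply (hypothetical helper)."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(\{.*?\})\s*" + re.escape(FENCE)
    match = re.search(pattern, text, re.DOTALL)
    if match is None:
        raise ValueError("no JSON blob found in model output")
    blob = json.loads(match.group(1))
    if "action" not in blob or "action_input" not in blob:
        raise ValueError("blob must contain 'action' and 'action_input'")
    return blob


# Made-up reply in the format the system prompt asks for
sample = (
    "Thought: I should look up the stock price\n"
    "Action:\n"
    + FENCE + "\n"
    + '{"action": "company_stock_price", "action_input": {"stock_symbol": "MSFT"}}\n'
    + FENCE
)

step = parse_json_action(sample)
print(step["action"], step["action_input"])
```

When "action" equals "Final Answer", the "action_input" value is the text returned to the user rather than a tool argument.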

3. Create the agent & agent executor

- Check out the documentation for create_structured_chat_agent

- Check out the documentation for AgentExecutor

- Change verbosity by setting verbose to True/False

agent = create_structured_chat_agent(llm, tools, prompt)

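# Note: the AgentExecutor parameter is max_iterations; a limit of 2 stops the agent after two iterations, so raise it for multi-step questions

agent_executor = AgentExecutor(agent=agent, tools=tools, max_iterations=2, verbose=True, handle_parsing_errors=True)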
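To build intuition for what the executor does with the agent, here is a toy loop written in plain Python. It is only a sketch under stated assumptions: `run_agent`, `scripted_plan` and `toy_tools` are hypothetical names, the "LLM" is a scripted function, and the stop message is illustrative rather than the library's exact wording.

```python
from typing import Callable


def run_agent(plan: Callable[[list], dict], tools: dict, max_iterations: int) -> dict:
    """Toy executor loop: ask the planner, run the chosen tool, repeat until done."""
    steps = []  # (action, observation) pairs, playing the role of the scratchpad
    for _ in range(max_iterations):
        action = plan(steps)
        if action["action"] == "Final Answer":
            return {"output": action["action_input"], "steps": steps}
        observation = tools[action["action"]](action["action_input"])
        steps.append((action, observation))
    return {"output": "Agent stopped due to iteration limit.", "steps": steps}


def scripted_plan(steps: list) -> dict:
    """Stand-in for the LLM: ask for the weather once, then answer."""
    if not steps:
        return {"action": "city_weather", "action_input": "Paris"}
    _, observation = steps[-1]
    return {"action": "Final Answer", "action_input": f"Forecast: {observation['forecast']}"}


# Dummy tool with a fixed result, similar in spirit to the tools defined earlier
toy_tools = {"city_weather": lambda city: {"temperature": 73, "forecast": "sunny"}}

print(run_agent(scripted_plan, toy_tools, max_iterations=2)["output"])
print(run_agent(scripted_plan, toy_tools, max_iterations=1)["output"])
```

The second call shows the effect of a limit that is too small: the tool runs, but the loop ends before the planner gets a chance to produce its final answer.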

4. Test

- Uncomment/comment questions to try out

# question = "Which of these cities is hotter, Paris or London"

# question = "I am visiting Paris, should I carry an umbrella?"

question = """

I am interested in investing in one of these stocks: AAPL, MSFT, or AMZN.

Decision Criteria:

Sunny Weather: Choose the stock with the lowest price.

Raining Weather: Choose the stock with the highest price.

Cloudy Weather: Do not buy any stock.

Location:

I am currently in New York.

Question:

Based on the current weather in New York and the stock prices, which stock should I invest in?

"""

# No chat_history is passed here, so results from earlier calls are lost and the agent may go into a loop.

agent_executor.invoke({

"input": question

})
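To check the agent's answer to the investment question, the expected result can be worked out by hand from the dummy data. The sketch below encodes the decision criteria directly; `expected_pick` is a hypothetical helper used only for verification, and the prices and forecasts are copied from the two dummy tools.

```python
# Values copied from the dummy tools company_stock_price and city_weather
prices = {"AAPL": 192.32, "MSFT": 415.60, "AMZN": 183.60}
forecasts = {"new york": "rain", "paris": "sunny", "london": "cloudy"}


def expected_pick(city: str):
    """Apply the decision criteria from the question (hypothetical helper)."""
    forecast = forecasts[city.lower()]
    if forecast == "sunny":
        return min(prices, key=prices.get)  # lowest price
    if forecast == "rain":
        return max(prices, key=prices.get)  # highest price
    return None  # cloudy: do not buy any stock


print(expected_pick("New York"))  # prints MSFT
```

New York reports rain, so the rule selects the highest-priced stock, MSFT at 415.60. An agent that answers anything else has lost track of a tool result or misapplied the criteria.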

Part-2

- You will refer to the documentation for AgentExecutor, create_react_agent to rewrite the code from the previous exercise (#3 Multi Step Agent).

- Once you are done, compare the size/complexity of your code with the solution for exercise #3

- The prompt & test cases are given

1. Set up tools

- Use the Wikipedia tool

- (Optional) Add the Tavily tool or any other tool of your choice

2. Set up the prompt

The prompt must have input keys:

- tools: contains descriptions and arguments for each tool.

- tool_names: contains all tool names.

- agent_scratchpad: contains previous agent actions and tool outputs as a string.
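To make the three keys concrete, here is a rough sketch of how such strings could be produced from ordinary Python functions and substituted into a template. It is not LangChain's own rendering code: `render_tools`, `get_weather`, `get_price` and the short template are hypothetical, chosen only to show the shape of each value.

```python
import inspect


def get_weather(city: str) -> dict:
    """Return the current weather for a city."""
    return {"city": city}


def get_price(symbol: str) -> dict:
    """Return the latest price for a stock symbol."""
    return {"symbol": symbol}


def render_tools(functions) -> str:
    """One line per tool: name, signature and docstring (hypothetical helper)."""
    lines = []
    for fn in functions:
        lines.append(f"{fn.__name__}{inspect.signature(fn)} - {inspect.getdoc(fn)}")
    return "\n".join(lines)


funcs = [get_weather, get_price]
tools_text = render_tools(funcs)                          # value for {tools}
tool_names_text = ", ".join(fn.__name__ for fn in funcs)  # value for {tool_names}

# Shortened stand-in template; the scratchpad is empty on the first call
template = "Tools:\n{tools}\nPick one of [{tool_names}]\nThought:{agent_scratchpad}"
print(template.format(tools=tools_text, tool_names=tool_names_text, agent_scratchpad=""))
```

On later iterations the scratchpad would hold the earlier Thought/Action/Observation text, which is how the model sees its own previous steps.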

# https://smith.langchain.com/hub/hwchase17/react

template="""

Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: {input}

Thought:{agent_scratchpad}

"""

# Create the template

prompt = PromptTemplate.from_template(template)
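The template above makes the model reply in a line-oriented text format rather than JSON. As an illustration of how such a reply can be turned into either a tool call or a final answer, the sketch below uses two regular expressions. `parse_react` is a hypothetical helper, not the parser LangChain ships, and the sample replies are made up.

```python
import re


def parse_react(text: str) -> dict:
    """Classify a ReAct-style reply as a finish or a tool call (hypothetical helper)."""
    final = re.search(r"Final Answer:\s*(.*)", text, re.DOTALL)
    if final:
        return {"type": "finish", "output": final.group(1).strip()}
    match = re.search(r"Action:\s*(.*?)\s*\n\s*Action Input:\s*(.*)", text, re.DOTALL)
    if match is None:
        raise ValueError("reply has neither an Action nor a Final Answer")
    return {
        "type": "action",
        "tool": match.group(1).strip(),
        "tool_input": match.group(2).strip(),
    }


# Made-up replies following the template's format
tool_call = parse_react(" I should search\nAction: wikipedia\nAction Input: Massive Attack Risingson")
finish = parse_react(" I now know the final answer\nFinal Answer: Mezzanine")

print(tool_call)
print(finish)
```

A reply that matches neither pattern is the kind of output that handle_parsing_errors is meant to deal with.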

3. Create the agent and the executor

Create the agent executor such that:

- maximum iterations is 3

- response should contain the intermediate steps

- should handle the parsing errors

Code it on your own :-) this is the exercise.

4. Tests

# A: The Jungle Book

# question = "Which film stars more animals, The Jungle Book or The Lone Ranger?"

# Q: Risingson is the first single from what album by Massive Attack, that was the first to be produced by Neil Davidge, along with the group?

# A: Mezzanine

question = "Risingson is the first single from what album by Massive Attack, that was the first to be produced by Neil Davidge, along with the group?"

# Q: Where is the basketball team that Mike DiNunno plays for based ?

# A: Ellesmere Port

# question = "Where is the basketball team that Mike DiNunno plays for based ?"

# Q: Which one of Ricardo Rodríguez Saá's relatives would become governor from 1983 to 2001?

# A: Adolfo Rodríguez Saá

# question="Which one of Ricardo Rodríguez Saá's relatives would become governor from 1983 to 2001?"

response = agent_executor.invoke({"input": question})

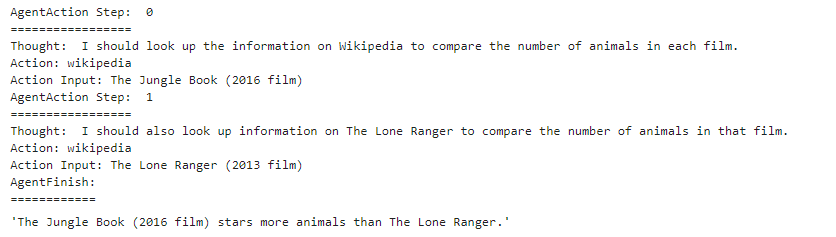

5. Print the response such that it shows the LLM’s thoughts

Example:

Hints

- Check out the intermediate_steps in the response

- Each AgentAction holds the tool name and its input
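As a starting point for the printing step, the sketch below walks a response dictionary shaped like the one an executor returns when intermediate steps are included: a list of (action, observation) pairs plus the final output. To keep it runnable without an LLM, it uses a stand-in class, `FakeAgentAction`, that mirrors the tool, tool_input and log fields of AgentAction. The response content is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FakeAgentAction:
    """Stand-in mirroring the tool, tool_input and log fields of AgentAction."""
    tool: str
    tool_input: str
    log: str


def format_steps(response: dict) -> str:
    """Render each intermediate step, then the final answer (hypothetical helper)."""
    lines = []
    for number, (action, observation) in enumerate(response["intermediate_steps"], start=1):
        lines.append(f"Step {number}")
        lines.append(f"  Thought/log: {action.log.strip()}")
        lines.append(f"  Tool: {action.tool}")
        lines.append(f"  Input: {action.tool_input}")
        lines.append(f"  Observation: {observation}")
    lines.append(f"Final answer: {response['output']}")
    return "\n".join(lines)


# Invented response; a real one comes from agent_executor.invoke(...)
fake_response = {
    "output": "Mezzanine",
    "intermediate_steps": [
        (
            FakeAgentAction("wikipedia", "Risingson", "I should look up the single."),
            "(tool output would appear here)",
        ),
    ],
}

print(format_steps(fake_response))
```

With a real response, the log field carries the model's thought text for that step, which is what this part of the exercise asks you to display.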

Solution

The solution to the exercise is available in the following notebook.

Optional exercises

- Use a different LLM. Keep in mind you may need to adjust the prompt

- Add different or more tools

- Try out complex logical, reasoning & factual questions