Exercise #2 PyTorch: Dynamic

Objective

Learn how LLMs are quantized dynamically using the PyTorch framework. Check out the impact on:

- Accuracy
- Size of model

The intent is not to teach PyTorch but to demystify quantization and show its real benefit: model compression without a significant loss of accuracy.

Steps

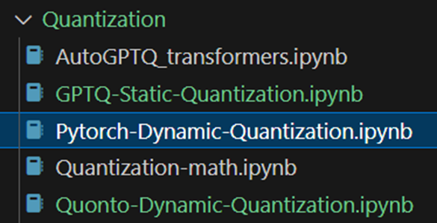

Create a new notebook under template/Quantization/Pytorch-Dynamic-Quantization.

- Load the non-quantized model and invoke it

Note: To understand how this code works, review the lessons in the Hugging Face (advanced) section.

```python
import os

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load a pre-trained model and tokenizer (OPT-125m here; GPT-2 is an alternative)
# model_name = "openai-community/gpt2"
model_name = "facebook/opt-125m"
tokenizer = AutoTokenizer.from_pretrained(model_name, clean_up_tokenization_spaces=False)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Test the model before quantization
text = "Once upon a time,"
inputs = tokenizer(text, return_tensors="pt")
outputs = model.generate(**inputs, max_new_tokens=10)

# Print model output
print("Original Model Output:")
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

- Apply dynamic quantization:
  - Quantize the model to INT8
  - Invoke the quantized model with the same input as the original model
  - Compare the outputs of the original and quantized models. Is the accuracy still good?
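The following is a pure-Python sketch of the idea behind `quantize_dynamic`. It is not PyTorch's implementation. Weights are converted to INT8 once, ahead of time. Activations are quantized on the fly at every call, using a scale derived from their observed range, and that on-the-fly step is what makes the scheme "dynamic". The helper names are hypothetical. The sketch also assumes symmetric per-tensor scaling for both weights and activations. PyTorch's real kernels may differ in details such as how activations are scaled and stored.

```python
# Pure-Python sketch of dynamic INT8 quantization for a single linear layer.
# Assumption: symmetric, per-tensor scaling for weights and activations.
# This models the idea only; it is not how PyTorch's kernels are implemented.

QMAX = 127  # largest magnitude used for signed 8-bit values in this sketch


def quantize_symmetric(values):
    """Map floats to integers in [-QMAX, QMAX] using a single scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / QMAX if max_abs > 0 else 1.0
    q = [max(-QMAX, min(QMAX, round(v / scale))) for v in values]
    return q, scale


def float_linear(weights, x):
    """Reference FP32 result: y[i] = sum_j weights[i][j] * x[j]."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in weights]


def prepare_weights(weights):
    """Done once, ahead of time: store the weights as INT8 values plus one scale."""
    cols = len(weights[0])
    q_flat, w_scale = quantize_symmetric([w for row in weights for w in row])
    q_rows = [q_flat[i:i + cols] for i in range(0, len(q_flat), cols)]
    return q_rows, w_scale


def dynamic_linear(q_weights, w_scale, x):
    """Done at every call: quantize the activations using their own observed range."""
    q_x, x_scale = quantize_symmetric(x)  # scale computed on the fly ("dynamic")
    acc = [sum(qw * qx for qw, qx in zip(row, q_x)) for row in q_weights]  # integer math
    return [a * w_scale * x_scale for a in acc]  # rescale the integer sums back to floats


W = [[0.5, -1.2, 0.3], [2.0, 0.1, -0.7]]
x = [1.0, 0.5, -2.0]
q_W, w_scale = prepare_weights(W)
print("FP32:", float_linear(W, x))
print("INT8:", dynamic_linear(q_W, w_scale, x))
```

An INT8 weight needs 1 byte instead of the 4 bytes of an FP32 weight, which is where the size saving comes from. The small gap between the two printed vectors is the quantization error that this exercise asks you to judge at model scale.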

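```python
# Apply dynamic quantization to the model.
# quantize_dynamic returns a quantized copy (inplace=False by default),
# so `model` stays available for comparison. Dynamically quantized
# Linear layers run on the CPU.
quantized_model = torch.quantization.quantize_dynamic(
    model,              # the model to quantize
    {torch.nn.Linear},  # layer types to quantize (Linear layers only)
    dtype=torch.qint8,  # store weights as signed 8-bit integers
)

# Test the quantized model's output with the same input
outputs_quantized = quantized_model.generate(**inputs, max_new_tokens=10)

# Print the output
print("\nQuantized Model Output:")
print(tokenizer.decode(outputs_quantized[0], skip_special_tokens=True))
```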
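Comparing the two decoded strings by eye is enough for this exercise. For a rough number, one simple option is to compare the generated token IDs position by position. This is an ad hoc measure assumed here, not a standard accuracy metric, and the `compare_generations` helper is hypothetical:

```python
def compare_generations(original_ids, quantized_ids):
    """Compare two token-ID sequences position by position.

    Returns (match_fraction, first_mismatch), where first_mismatch is the index of
    the first differing position, or None if the sequences are identical.
    """
    longest = max(len(original_ids), len(quantized_ids))
    if longest == 0:
        return 1.0, None
    pairs = list(zip(original_ids, quantized_ids))
    matches = sum(1 for a, b in pairs if a == b)
    first_mismatch = next((i for i, (a, b) in enumerate(pairs) if a != b), None)
    # A length difference counts as a mismatch at the end of the shorter sequence
    if first_mismatch is None and len(original_ids) != len(quantized_ids):
        first_mismatch = len(pairs)
    return matches / longest, first_mismatch


# Example with made-up token IDs (not real model output)
print(compare_generations([2, 11, 22, 33, 44], [2, 11, 22, 35, 44]))  # (0.8, 3)
```

With the exercise's variables, you could set `n = inputs["input_ids"].shape[1]` and call `compare_generations(outputs[0].tolist()[n:], outputs_quantized[0].tolist()[n:])`. The slice skips the prompt tokens that both outputs share. An early first mismatch means the quantized model's next-token choices diverged sooner.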

- Compare the sizes of the original and quantized models:
  - Define a utility function that calculates the size of a model on disk
  - Call the utility function to estimate the sizes of the original and quantized models
  - How much space do you save?

```python
# Function to print and compare model sizes.
# The code below serializes the model's state_dict to the file system.
# Note that this only gives an idea of relative sizes,
# not the exact memory footprints.
def print_size_of_model(model, model_name=""):
    torch.save(model.state_dict(), f"{model_name}.pt")
    size_mb = os.path.getsize(f"{model_name}.pt") / 1e6
    print(f"\nModel size of {model_name}: {size_mb:.2f} MB")

# Compare sizes of original and quantized models
print_size_of_model(model, "Original_Model")
print_size_of_model(quantized_model, "Quantized_Model")

# Clean up saved files after checking size
os.remove("Original_Model.pt")
os.remove("Quantized_Model.pt")
```
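A quick estimate helps interpret the sizes that `print_size_of_model` reports. Going from FP32 to INT8 saves at most 4x, and only for the parameters that actually get quantized. The sketch below uses made-up parameter counts, and `estimate_sizes_mb` is a hypothetical helper. It assumes that only Linear-layer weights become INT8 and that per-layer scales and file-format overhead can be ignored.

```python
# Back-of-the-envelope estimate of the size saving from INT8 dynamic quantization.
# Assumptions (for illustration only):
#   - FP32 values take 4 bytes, INT8 values take 1 byte
#   - only Linear-layer weights are converted to INT8; everything else
#     (embeddings, biases, LayerNorm, ...) stays FP32
#   - scales, zero points, and serialization overhead are ignored

BYTES_FP32 = 4
BYTES_INT8 = 1


def estimate_sizes_mb(linear_weight_params, other_params):
    """Return (original_mb, quantized_mb) using 1 MB = 1e6 bytes, as in print_size_of_model."""
    original = (linear_weight_params + other_params) * BYTES_FP32 / 1e6
    quantized = (linear_weight_params * BYTES_INT8 + other_params * BYTES_FP32) / 1e6
    return original, quantized


# Hypothetical split of a ~125M-parameter model (not measured from OPT-125m)
orig_mb, quant_mb = estimate_sizes_mb(linear_weight_params=85_000_000, other_params=40_000_000)
print(f"original ~ {orig_mb:.0f} MB, quantized ~ {quant_mb:.0f} MB, ratio ~ {orig_mb / quant_mb:.2f}x")
# prints: original ~ 500 MB, quantized ~ 245 MB, ratio ~ 2.04x
```

To plug in real counts, you could sum `m.weight.numel()` over the modules of `model` that are instances of `torch.nn.Linear`. Shared (tied) weights may be counted twice this way.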

- Try out a different model:

  Larger models (over ~1B parameters) need a GPU for quantization. Use a Google Colab T4 runtime for them.

  - Not every model gains efficiency from quantization
  - Explore other quantizable models on Hugging Face and quantize one, e.g., “openai-community/gpt2”
  - Answer the question: Can you use PyTorch for INT4 quantization? Use the PyTorch data type documentation to answer it.

Solution

Open the notebook locally

Google Colab

- Open the notebook in Google Colab