Exercise #3: Quantize & Publish

Objective

Use the GPTQ library to statically quantize a model and then publish it to your own Hugging Face space. Here are the 3 main objectives:

- Learn static quantization
- Use a calibration dataset
- Publish models to Hugging Face
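To make the role of the calibration dataset concrete, here is a toy sketch in plain Python. It is not the GPTQ algorithm; it only illustrates the general idea that static quantization fixes its scale ahead of time from calibration data, while dynamic quantization recomputes the scale from each input. The helper names (`scale_for`, `quantize`, `dequantize`) and the sample values are made up for illustration.

```python
def scale_for(values, bits=8):
    """Symmetric scale that maps the largest magnitude to the top integer level."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, bits=8):
    """Round to integer levels and clip to the representable range."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(levels, scale):
    """Map integer levels back to approximate real values."""
    return [q * scale for q in levels]


# Static: the scale is computed once, offline, from calibration data.
calibration_batches = [[0.1, -0.5, 0.9], [1.2, -0.3, 0.4]]
static_scale = scale_for([v for batch in calibration_batches for v in batch])

# A new input whose second value lies outside the calibrated range.
new_input = [0.3, -2.0, 0.2]

# Static quantization reuses the precomputed scale, so -2.0 is clipped.
static_restored = dequantize(quantize(new_input, static_scale), static_scale)

# Dynamic quantization derives the scale from the input itself at run time.
dynamic_scale = scale_for(new_input)
dynamic_restored = dequantize(quantize(new_input, dynamic_scale), dynamic_scale)

print("static :", static_restored)
print("dynamic:", dynamic_restored)
```

The clipped value in the static result shows why the calibration data should be representative of the inputs the model will see later.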

The notebook requires a GPU. If you do not have a GPU on your local machine, try this notebook on Google Colab with a T4 runtime.

Steps:

- Review the differences between static and dynamic quantization
- Go through an overview of the AutoGPTQ library
- Open the notebook in Google Colab (use the link below)
- Review the code in each cell before running it

  You will need a Hugging Face Hub API key with write access to publish the quantized model to your space.

- Update the model card for your quantized version of the model on Hugging Face
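The quantize-and-publish flow in the steps above might look roughly like the sketch below. It follows the basic usage pattern from the AutoGPTQ README and uploads the result with `huggingface_hub`; the exercise notebook may do this differently. The function name `quantize_and_publish`, the `HF_TOKEN` environment variable, and the repository name in the example call are assumptions for illustration. Running it needs the `auto-gptq`, `transformers`, and `huggingface_hub` packages, a GPU, and a token with write access.

```python
import os


def quantize_and_publish(base_model_id, repo_id, calibration_texts,
                         out_dir="quantized-model", bits=4):
    """Quantize base_model_id with AutoGPTQ and upload the result to repo_id."""
    if not calibration_texts:
        raise ValueError("GPTQ needs at least one calibration example")

    # Imported lazily so this file can be loaded without the packages installed.
    from auto_gptq import AutoGPTQForCausalLM, BaseQuantizeConfig
    from huggingface_hub import HfApi
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_model_id, use_fast=True)

    # Calibration examples: tokenized text with input_ids and attention_mask.
    examples = [tokenizer(text) for text in calibration_texts]

    quantize_config = BaseQuantizeConfig(bits=bits, group_size=128, desc_act=False)
    model = AutoGPTQForCausalLM.from_pretrained(base_model_id, quantize_config)

    # Quantize the weights using the calibration examples.
    model.quantize(examples)

    # Save the quantized weights and the tokenizer side by side.
    model.save_quantized(out_dir, use_safetensors=True)
    tokenizer.save_pretrained(out_dir)

    # Publish the folder; HF_TOKEN is an assumed variable holding a write token.
    api = HfApi(token=os.environ["HF_TOKEN"])
    api.create_repo(repo_id, exist_ok=True)
    api.upload_folder(folder_path=out_dir, repo_id=repo_id)


# Example call (hypothetical repository name; needs a GPU and network access):
# quantize_and_publish(
#     "facebook/opt-125m",
#     "your-username/opt-125m-gptq-4bit",
#     ["Some text that resembles what the model will see in use."],
# )
```

A single sentence is enough to exercise the code path, but a real calibration set would normally contain many representative samples.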

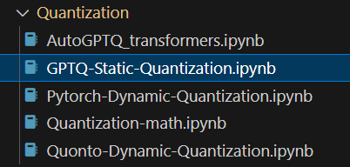

Open notebook locally

- The notebook requires a GPU to run. If you do not have one, use Google Colab.

Google Colab

- Change the notebook's runtime to T4; otherwise you will see an error.
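Before running the quantization cells, it can help to confirm that a GPU is actually visible to the runtime. The sketch below uses only the Python standard library and the `nvidia-smi` command-line tool, which is assumed to be on the path whenever an NVIDIA GPU runtime is attached; the helper name `list_nvidia_gpus` is made up for illustration.

```python
import shutil
import subprocess


def list_nvidia_gpus():
    """Return the GPU names reported by nvidia-smi, or [] if none are visible."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
if gpus:
    print("GPU runtime detected:", ", ".join(gpus))
else:
    print("No NVIDIA GPU detected; in Colab, switch the runtime type to T4 GPU.")
```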