Project: Build fraud dataset

Objective

You work for a bank and are responsible for building an API that will be invoked to detect fraudulent credit card transactions in real time. To prove feasibility, you have decided to build a proof of concept (PoC). The challenge is that you do not have access to real data for compliance reasons.

In this project you will create a balanced dataset for fine-tuning an LLM for fraud detection.

Part-1: Generate the dataset in JSON format

Part-2: Convert data to JSON line format and split

Part-3: Balance the dataset

Fine-tuning (optional): See the instructions on the page titled "Fine-tune for fraud detection"

Fraud detection feature set

This dataset is for experimentation only. Fraud detection models are typically built, very effectively, with classical machine learning models; LLMs are currently an area of interest for this use case. In a real-world scenario, you would work with data and business experts to identify the parameters.

In this synthetic dataset, transactions are labeled as fraudulent based on a few simplified criteria that might indicate unusual or suspicious activity in real-world scenarios. Here are the criteria used:

- High Transaction Amount: Large purchases, especially for luxury items like electronics or jewelry, are often flagged in fraud detection systems because they are more likely to indicate fraudulent activity.
  - Examples: Transactions over $500 (e.g., $512.34 for electronics and $1500.00 for jewelry) are labeled as “Fraud.”

- Unusual Merchant Types: Certain types of merchants (e.g., jewelry or electronics stores) are more commonly associated with high-value, high-risk transactions, which may raise a red flag.
  - Examples: Transactions at electronics or jewelry merchants, especially with high amounts, are labeled as “Fraud.”

- Foreign Transactions: Transactions occurring in a location that differs from the user’s normal spending pattern, especially international transactions, are flagged as suspicious.
  - Example: A high-value transaction from a location like Tokyo (for an online retailer) could be marked as “Fraud.”

- Unusual Timing: Odd hours for transactions may increase the likelihood of a fraud label, especially if the transaction does not align with typical spending times.
  - Example: A large transaction made late at night from a different country could suggest fraudulent activity.

- Location: If the transaction takes place in a country different from the customer’s country, there is a high likelihood of fraud.
  - Example: If the customer’s home country is the UK and a transaction occurs in Russia, there is a high likelihood of fraud.

In real fraud detection, these simplified indicators would be part of a larger set of signals, including historical user behavior, spending patterns, device and network information, and real-time risk scoring to accurately determine fraud.
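The simplified criteria above can be expressed as a small rule-based labeler, which may help when you review whether the LLM's labels are consistent. This is a minimal sketch only: the $500 threshold and the electronics/jewelry merchant types come from the examples above, but the definition of "odd hours" (midnight to 5 a.m.), the rule that two or more signals mean fraud, and the names `Transaction`, `from_record`, and `label_transaction` are assumptions for illustration, not part of the project's specification.

```python
from dataclasses import dataclass
from datetime import datetime

# Illustrative rules only. HIGH_AMOUNT and HIGH_RISK_MERCHANTS follow the
# examples above; NIGHT_HOURS and the "two or more signals" rule are assumptions.
HIGH_AMOUNT = 500.00
HIGH_RISK_MERCHANTS = {"Electronics", "Jewelry"}
NIGHT_HOURS = range(0, 5)  # assumed "odd hours": 00:00 to 04:59


@dataclass
class Transaction:
    amount: float
    merchant_type: str
    location_country: str
    customer_country: str
    hour: int


def from_record(rec: dict) -> Transaction:
    """Build a Transaction from a record in the dataset's JSON format."""
    # "Paris, France" -> "France"; assumes location and customer_country
    # spell the country the same way (e.g. both "USA").
    country = rec["location"].rsplit(",", 1)[-1].strip()
    hour = datetime.strptime(rec["transaction_time"], "%Y-%m-%d %H:%M:%S").hour
    return Transaction(rec["amount"], rec["merchant_type"], country,
                       rec["customer_country"], hour)


def label_transaction(txn: Transaction) -> tuple[str, str]:
    """Return (label, comment) by counting simple risk signals."""
    reasons = []
    if txn.amount > HIGH_AMOUNT:
        reasons.append("high amount")
    if txn.merchant_type in HIGH_RISK_MERCHANTS:
        reasons.append("high-risk merchant type")
    if txn.location_country != txn.customer_country:
        reasons.append("transaction outside customer's country")
    if txn.hour in NIGHT_HOURS:
        reasons.append("unusual hour")
    # Assumed decision rule: two or more signals => fraud
    if len(reasons) >= 2:
        return "fraud", "; ".join(reasons)
    comment = f"single signal: {reasons[0]}" if reasons else "typical transaction"
    return "not_fraud", comment
```

With the two sample records shown later in Part-1, this sketch labels the grocery purchase in Chicago as `not_fraud` and the jewelry purchase in Paris as `fraud`.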

Part-1: Generate the dataset in JSON format

Step-1 Design a prompt

- You will use a chat LLM to create the synthetic dataset; for that, you need a prompt

- Use the feature set discussed above to design a prompt that generates 5 records for validation

I need a synthetic dataset for credit card transactions, with details on whether each transaction is potentially fraudulent. The dataset should include diverse records with fields such as Transaction ID, Amount, Merchant Type, Location (city and country), Transaction Time, Device Type, Customer Country, Customer State, Transaction Label (Fraud or Not Fraud), and a Comment.

For each row:

- Generate a unique **Transaction ID**.

- Choose an **Amount** value, varying from low (e.g., below $100) to high (e.g., above $1,000).

- **Merchant Types** should include categories like Groceries, Electronics, Restaurants, Jewelry, and Online Retail.

- **Location** should be specific, including city and country (e.g., “New York, USA” or “Tokyo, Japan”).

- **Transaction Time** should include both date and time.

- For **Device Type**, pick from options like Mobile, Desktop, and Tablet.

- Include **Customer Country** and **Customer State**.

- Label each transaction as **Fraud** or **Not Fraud** based on the pattern of spending, geography, and amount:
  - Mark a transaction as "Fraud" if it’s a high amount in a location far from the customer's usual country/state.
  - Mark as "Not Fraud" for typical transactions (e.g., within usual geography, lower amounts).

- Include a **Comment** explaining the reason for the label (e.g., “unusually high amount outside customer’s region” or “typical transaction in customer’s area”).

Generate 5 example records in the following JSON format:

{
  "transaction_id": "...",
  "amount": ...,
  "merchant_type": "...",
  "location": "...",
  "transaction_time": "...",
  "Device Type": "...",
  "customer_country": "...",
  "customer_state": "...",
  "transaction_label": "not_fraud | fraud",
  "comment": "..."
}

Step-2 Generate data for review

- Select an LLM (e.g., ChatGPT) for synthetic data generation

- Use the prompt to generate limited data for review

- If you are NOT satisfied with the performance of the prompt, adjust it

The instructions in this project use ChatGPT, but you may also use other LLMs such as Gemini or Claude.
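While reviewing the generated records, a quick programmatic check can catch problems such as missing fields, label values other than `fraud`/`not_fraud` (the counting code in Part-3 treats anything other than exactly `fraud` as not fraud), or repeated transaction IDs before you scale up. A minimal sketch, assuming you paste the LLM's JSON array output into a Python string; `find_problems` is a hypothetical helper, and the field names are copied from the prompt's JSON template.

```python
import json

# Field names copied from the prompt's JSON template (including "Device Type")
REQUIRED_FIELDS = {
    "transaction_id", "amount", "merchant_type", "location",
    "transaction_time", "Device Type", "customer_country",
    "customer_state", "transaction_label", "comment",
}
VALID_LABELS = {"fraud", "not_fraud"}


def find_problems(records: list[dict]) -> list[str]:
    """Return human-readable problems found in a list of generated records."""
    problems = []
    seen_ids = set()
    for i, rec in enumerate(records):
        missing = REQUIRED_FIELDS - set(rec)
        if missing:
            problems.append(f"record {i}: missing {sorted(missing)}")
        if rec.get("transaction_label") not in VALID_LABELS:
            problems.append(f"record {i}: unexpected label {rec.get('transaction_label')!r}")
        if not isinstance(rec.get("amount"), (int, float)):
            problems.append(f"record {i}: amount is not a number")
        txn_id = rec.get("transaction_id")
        if txn_id in seen_ids:
            problems.append(f"record {i}: duplicate transaction_id {txn_id}")
        seen_ids.add(txn_id)
    return problems


# Usage: parse the LLM's output and print any problems (nothing printed means none found)
llm_output = '[{"transaction_id": "TXN1", "amount": 85.5, "transaction_label": "Fraud"}]'
for problem in find_problems(json.loads(llm_output)):
    print(problem)
```

If the check reports problems, adjusting the prompt (for example, repeating that labels must be lowercase `fraud` or `not_fraud`) is usually cheaper than fixing records by hand.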

Step-3 Generate synthetic data

- Instruct the LLM to generate the required number of records

- The final dataset should have a minimum of 80 examples (before splitting)

- The final data file should be in JSON format.

- Copy the data as a single JSON array into the following file in the template project:

[Template project]/Fine-Tuning/data/synthetic-credit-card-fraud/credit-card-fraud-chatgpt.json

Do not close the chat session; you will use it later to generate additional examples for balancing the dataset.

Because of the LLM’s maximum output token limit, you may need to repeat this step several times and stitch the rows together. At the end, you should have a single JSON file containing a single array.

Here is an example with just 2 records:

```json
[
  {
    "transaction_id": "TXN00123456",
    "amount": 85.50,
    "merchant_type": "Groceries",
    "location": "Chicago, USA",
    "transaction_time": "2024-10-28 14:32:15",
    "Device Type": "Mobile",
    "customer_country": "USA",
    "customer_state": "IL",
    "transaction_label": "not_fraud",
    "comment": "Typical grocery purchase within customer's state"
  },
  {
    "transaction_id": "TXN00123457",
    "amount": 1200.75,
    "merchant_type": "Jewelry",
    "location": "Paris, France",
    "transaction_time": "2024-10-28 09:15:42",
    "Device Type": "Desktop",
    "customer_country": "USA",
    "customer_state": "CA",
    "transaction_label": "fraud",
    "comment": "High amount spent internationally, unusual for customer location"
  }
]
```
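If you generated the data over several responses, one way to stitch them together is to save each response's array to its own file and merge the files in Python. A minimal sketch, assuming hypothetical part files named with zero-padded numbers (part-01.json, part-02.json, ...) so that sorted order matches generation order; `stitch_parts` and `stitch_to_file` are illustrative helpers, and they do not deduplicate transaction IDs.

```python
import glob
import json


def stitch_parts(part_files: list[str]) -> list[dict]:
    """Concatenate several JSON array files into a single list of records."""
    merged = []
    for path in part_files:
        with open(path) as f:
            part = json.load(f)
        if not isinstance(part, list):
            raise ValueError(f"{path} does not contain a JSON array")
        merged.extend(part)
    return merged


def stitch_to_file(pattern: str, output_file: str) -> int:
    """Merge every file matching `pattern` (in sorted order) into one JSON array file."""
    records = stitch_parts(sorted(glob.glob(pattern)))
    with open(output_file, "w") as f:
        json.dump(records, f, indent=2)
    return len(records)


# Example (hypothetical part-file names):
# n = stitch_to_file("./synthetic-credit-card-fraud/part-*.json",
#                    "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt.json")
```

Zero-padding matters because plain string sorting would place part-10.json before part-2.json.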

If you prefer to move to the next step without following the steps above, the JSON data file is already provided in the project repository. Copy Fine-Tuning/data/synthetic-credit-card-fraud/credit-card-fraud-chatgpt.json from the project repository to the Fine-Tuning/data/synthetic-credit-card-fraud folder of your project.

Part-2: Convert data to JSON line format and split

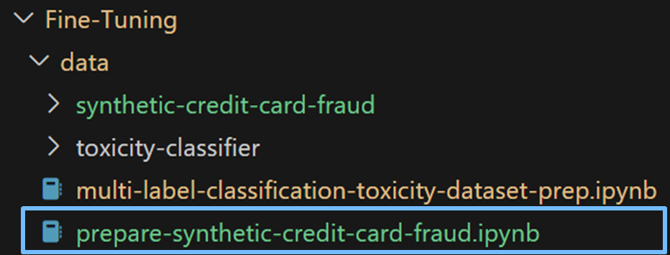

Start by creating a new notebook at:

[Template project]/Fine-Tuning/data/prepare-synthetic-credit-card-fraud.ipynb

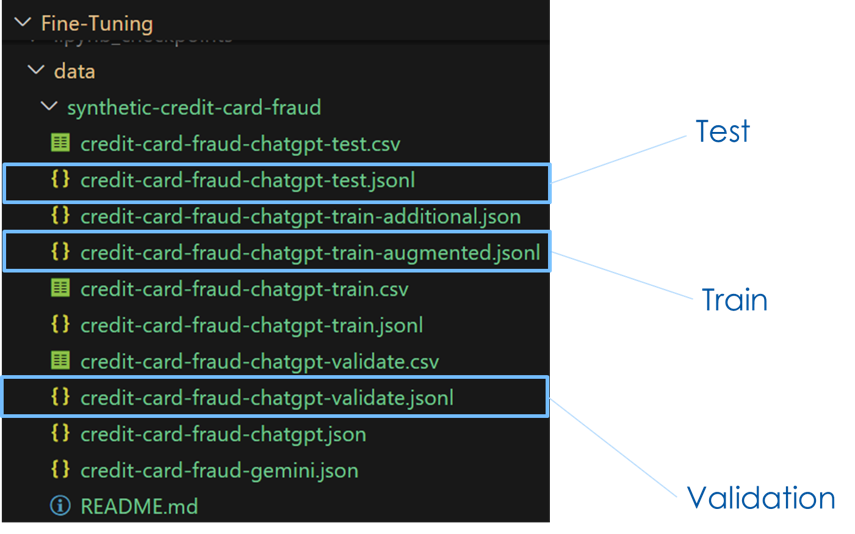

Step-1 Pre-process data to JSON line format & split

Review the code, copy it into your notebook, and run it. It generates 3 files with data in JSONL format:

- ./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train.jsonl

- ./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-validate.jsonl

- ./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-test.jsonl

```python
import json

# This file has the synthetic data as a single JSON array [ ]
j_file = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt.json"

with open(j_file) as f:
    dat = json.load(f)


# Convert a list of records to JSON Lines format (one JSON object per line)
def write_jsonl_file(dat_subset, file_name):
    jsonl = ""
    for rec in dat_subset:
        jsonl = jsonl + json.dumps(rec) + "\n"
    with open(file_name, "w") as f:
        f.write(jsonl)
    print(file_name, "# of lines : ", len(dat_subset))


# The slices below assume the minimum 80-record dataset (56 train / 14 validate / 10 test);
# with more records, every record from index 70 onward goes to the test split.
output_file_prefix = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-"

# Train - split
file_name = output_file_prefix + "train.jsonl"
write_jsonl_file(dat[0:56], file_name)

# Validation - split
file_name = output_file_prefix + "validate.jsonl"
write_jsonl_file(dat[56:70], file_name)

# Test - split
file_name = output_file_prefix + "test.jsonl"
write_jsonl_file(dat[70:], file_name)
```
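The fixed slices work well when the records are already in random order. If the LLM produced the records in label-grouped batches (for example, all fraud cases in one response), the splits can end up skewed. A minimal sketch of a shuffled, ratio-based alternative; the helper `split_records`, the seed, and the default fractions (chosen to reproduce 56/14/10 for 80 records) are assumptions, not part of the original notebook.

```python
import random


def split_records(records, train_frac=0.7, validate_frac=0.175, seed=42):
    """Shuffle a copy of `records` and cut it into train/validate/test lists.

    The default fractions reproduce the 56/14/10 split of an 80-record file.
    A fixed seed keeps the split reproducible across notebook runs.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_validate = round(len(shuffled) * validate_frac)
    train = shuffled[:n_train]
    validate = shuffled[n_train:n_train + n_validate]
    test = shuffled[n_train + n_validate:]  # whatever remains
    return train, validate, test
```

In the notebook, you could call `train, validate, test = split_records(dat)` and pass each list to `write_jsonl_file` in place of the fixed slices.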

Part-3: Balance the dataset

Step-1 Check if the training dataset needs to be balanced

At the end of this step, you will know if additional data needs to be generated for balancing the dataset.

- Get the counts of fraud and not_fraud records

- Check whether they are roughly equal

```python
import json

# Get the counts for fraud & not_fraud examples
training_file_name = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train.jsonl"


# Count fraud vs. not_fraud examples in a JSON Lines file and print the percentages.
# Any label other than exactly "fraud" (e.g. "Fraud") is counted as not_fraud.
def get_training_dataset_distribution(data_file_name):
    fraud_count = 0
    not_fraud_count = 0
    with open(data_file_name) as f:
        for line in f:
            if json.loads(line)["transaction_label"] == "fraud":
                fraud_count = fraud_count + 1
            else:
                not_fraud_count = not_fraud_count + 1

    # Calculate % of examples labeled as fraud (truncated to a whole number)
    fraud_pct = int(fraud_count * 100 / (fraud_count + not_fraud_count))
    print("Fraud labels : ", fraud_pct, "% ")
    print("Not_Fraud labels : ", (100 - fraud_pct), "% ")
    return fraud_count, not_fraud_count


# Check the balance
fraud_count, not_fraud_count = get_training_dataset_distribution(training_file_name)
```

Determine how many additional examples are needed, and for which label.

```python
# Check number of additional examples to be generated
difference = fraud_count - not_fraud_count
if difference > 0:
    print("Augmentation suggested. Add examples for 'Not Fraud':", difference)
elif difference < 0:
    print("Augmentation suggested. Add examples for 'Fraud':", -difference)
else:
    print("Dataset is balanced")
```

Step-2 Generate suggested number of examples

- Use the earlier chat session to generate the suggested number of records with ChatGPT (or another LLM)

- Save the examples as a single JSON array to the file ./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train-additional.json
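Before appending, it can be worth confirming that the saved file contains the suggested number of records and only the under-represented label. A minimal sketch; `check_additional` is a hypothetical helper, and `expected_count` would be the number printed by the check in Step-1.

```python
from collections import Counter


def check_additional(records, expected_label, expected_count):
    """Return a list of mismatches between generated records and what was requested."""
    issues = []
    if len(records) != expected_count:
        issues.append(f"expected {expected_count} records, got {len(records)}")
    # Count every label present; anything other than expected_label is a mismatch
    labels = Counter(rec.get("transaction_label") for rec in records)
    unexpected = {label: n for label, n in labels.items() if label != expected_label}
    if unexpected:
        issues.append(f"records with other labels: {unexpected}")
    return issues
```

For example, if Step-1 suggested 6 more "Not Fraud" examples, `check_additional(additional_dat, "not_fraud", 6)` should return an empty list.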

Step-3 Balance the training dataset

- Convert the additional examples to JSON line format

- Read the existing training dataset

- Write the training examples followed by the additional examples to a new file, credit-card-fraud-chatgpt-train-augmented.jsonl

```python
import json

# JSON file with additional examples (a single JSON array)
j_file_additional = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train-additional.json"

# Open the file and read the JSON array data
with open(j_file_additional) as f:
    additional_dat = json.load(f)

# Print count of additional examples for validation
print("# of additional examples : ", len(additional_dat))

# Convert JSON array to JSON Lines
jsonl = ""
for rec in additional_dat:
    jsonl = jsonl + json.dumps(rec) + "\n"

# Read the original training examples from credit-card-fraud-chatgpt-train.jsonl
training_file_name = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train.jsonl"
with open(training_file_name) as training_file:
    original_training_dat = training_file.read()

# Write the original examples followed by the additional ones to a new augmented file
output_train_file = "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train-augmented.jsonl"
with open(output_train_file, "w") as f:
    f.write(original_training_dat)
    f.write(jsonl)

# Check if the dataset is now balanced
get_training_dataset_distribution(output_train_file)
```
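Because the additional examples are appended at the end, all of them sit together in the augmented file. If the fine-tuning tool you use does not shuffle training data itself (check its documentation), you may want to shuffle the file first. A minimal sketch; the helper name `shuffle_jsonl`, the seed, and the output file name in the usage note are hypothetical.

```python
import random


def shuffle_jsonl(in_file, out_file, seed=42):
    """Rewrite a JSON Lines file with its lines in a reproducible random order."""
    with open(in_file) as f:
        # Skip blank lines and make sure every line ends with a newline
        lines = [line.rstrip("\n") + "\n" for line in f if line.strip()]
    random.Random(seed).shuffle(lines)
    with open(out_file, "w") as f:
        f.writelines(lines)
    return len(lines)
```

For example: `shuffle_jsonl(output_train_file, "./synthetic-credit-card-fraud/credit-card-fraud-chatgpt-train-augmented-shuffled.jsonl")`. Shuffling changes only the order, so the label distribution reported by `get_training_dataset_distribution` stays the same.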

Solution

- Dataset files

- Notebook for splitting and balancing