Local LLM Utility

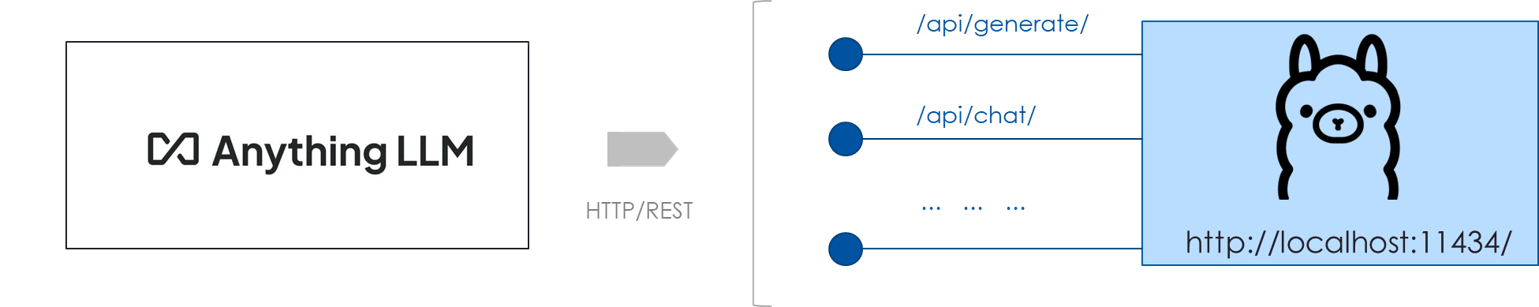

A chat user interface is one of the most common ways of interacting with an LLM. These chat interfaces commonly offer additional features such as document Q&A. Multiple AI-UI applications are available as open source, and they connect to an LLM hosted over HTTP/REST. The benefit of this setup is low cost: it is free. It just needs a decent machine to host the LLM locally and a graphical user interface to interact with the locally hosted model. Note that many AI-UI applications also offer options to connect to remote third-party LLMs.

Here are a few AI-UI options:

Open WebUI: Python package that is easy to install and use. Works with Ollama.

GPT4All: Works with a wide range of open source LLMs. Very similar to ChatGPT.

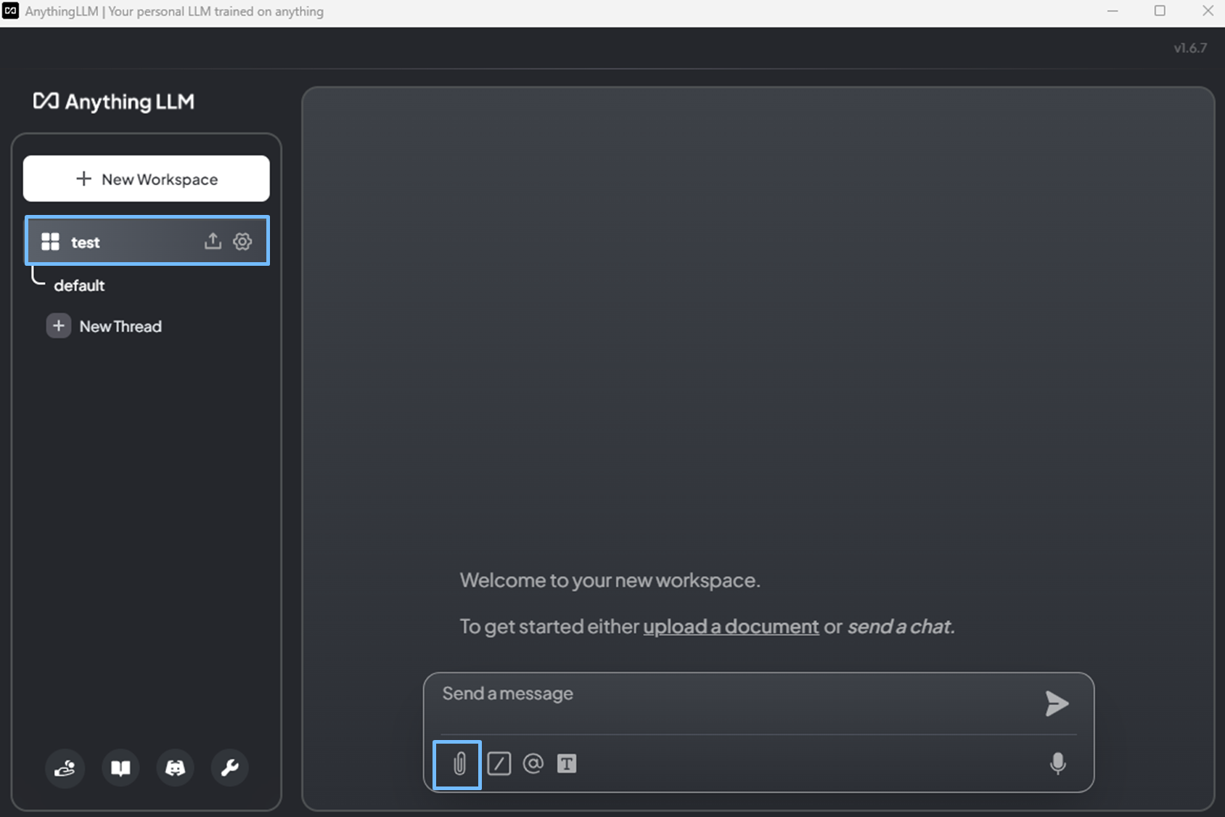

AnythingLLM: Desktop application. It can connect to a locally hosted LLM (Ollama) or to cloud-hosted LLMs.

The performance of this setup depends on the model and on the resources available on your machine. Larger models generally need a GPU to respond quickly; as a result, responses from larger LLMs may take longer on low-power machines without GPUs.
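One way to put a number on this is generation throughput in tokens per second. The sketch below assumes a response dictionary shaped like the JSON returned by Ollama's `/api/generate` endpoint, where `eval_count` is the number of generated tokens and `eval_duration` is the generation time in nanoseconds. The function name `tokens_per_second` and the sample values are made up for illustration; they are not measurements.

```python
def tokens_per_second(response: dict) -> float:
    """Compute generation throughput from an Ollama-style response dict.

    Assumes `eval_count` (generated tokens) and `eval_duration`
    (nanoseconds spent generating) are present in the response.
    """
    eval_count = response["eval_count"]
    eval_duration_ns = response["eval_duration"]
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds before dividing.
    return eval_count / (eval_duration_ns / 1e9)


# Illustrative values only: 120 tokens generated in 6 seconds.
sample = {"eval_count": 120, "eval_duration": 6_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # 20.0 tokens/s
```

Running the same prompt against different models on the same machine and comparing this figure gives a rough sense of which models are practical on your hardware.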

Objective

Host an open source LLM locally and use it with a generic AI-UI: AnythingLLM.

- Ollama: Allows you to host models locally on your own machine.

- AnythingLLM: A generic LLM utility application that can be used with locally and remotely hosted LLMs.

Setup

- Install Ollama

Download and install Ollama on your machine.

You can watch this video to learn more about Ollama.

- Install AnythingLLM
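After installing, it can help to confirm that the Ollama server is reachable before moving on. The sketch below is a minimal check, assuming the server is running and listening on Ollama's default address `http://localhost:11434`, and that the `/api/tags` endpoint returns a JSON object with a `models` list. The helper names `model_names` and `list_local_models` are hypothetical and used only for this example.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an /api/tags style payload."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        print("Ollama is reachable. Local models:", list_local_models())
    except (urllib.error.URLError, OSError) as exc:
        print("Could not reach Ollama:", exc)
```

An empty list is expected on a fresh install, since no model has been downloaded yet.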

Try it out

- Launch Ollama

Open a terminal window on your machine and run the command below. On first run, this downloads the gemma2 model weights to the file system cache and then starts an interactive prompt. (Watch this video to learn more about how to use other models with Ollama.)

ollama run gemma2

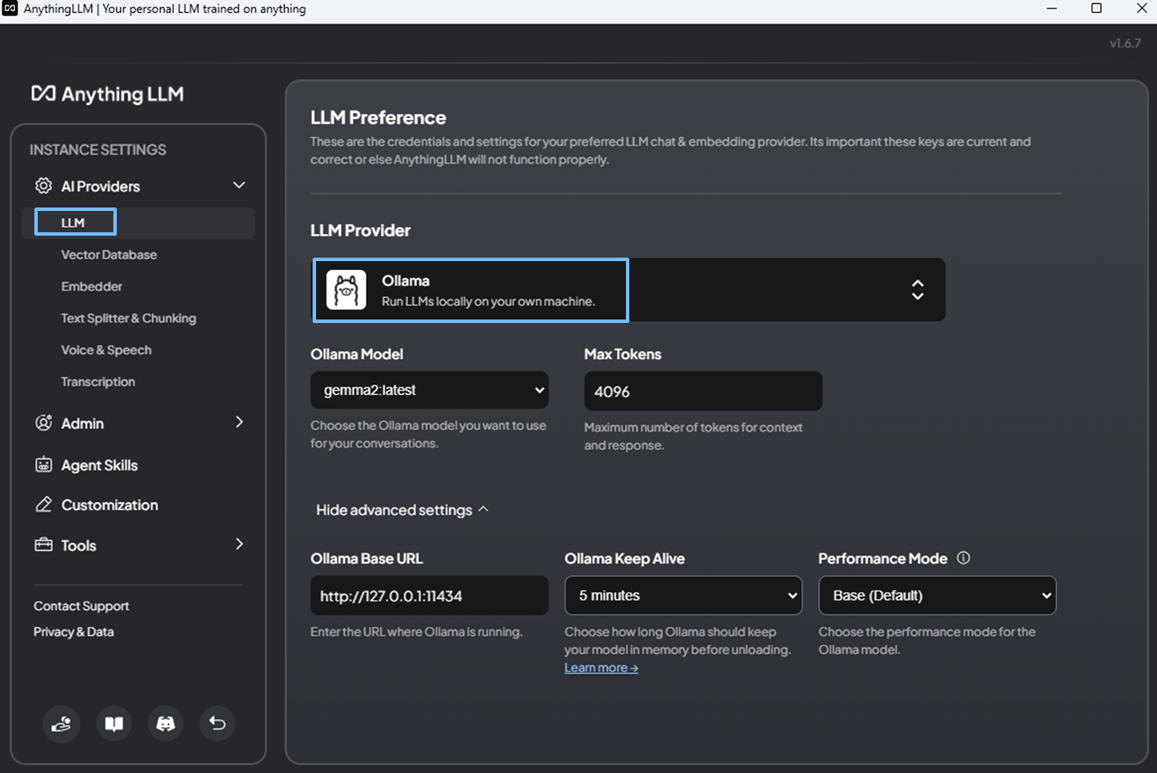

- Set up AnythingLLM to use the Ollama gemma2 setup

  - Launch the AnythingLLM desktop application

  - Provide an email address to register

  - Set up AnythingLLM to use the local Ollama

  - Click on Save changes and you are done!

- Create a workspace

  - Think of this as a session, e.g., name it 'test'

  - Interact with the local LLM!
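AnythingLLM talks to Ollama over HTTP/REST, and the same local endpoint can be called directly from a script. The sketch below is a minimal example, assuming Ollama is running on its default address `http://localhost:11434` and that the gemma2 model has already been downloaded. It posts a prompt to the `/api/generate` endpoint with streaming turned off and reads the `response` field of the returned JSON. The helper names `build_generate_request` and `ask` are hypothetical.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(
    prompt: str, model: str = "gemma2", base_url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "gemma2", timeout: float = 120.0) -> str:
    """Send a prompt to the local model and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(ask("Explain in one sentence what a local LLM is."))
    except (urllib.error.URLError, OSError) as exc:
        print("Could not reach Ollama:", exc)
```

The generous timeout is deliberate: on a machine without a GPU, the first response can take a while because the model has to be loaded into memory.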