LLM challenges

Objective

Demonstrate common LLM challenges in code, using open-source models from Hugging Face.
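The sketch below shows one way to send a prompt to an open-source Hugging Face model. It assumes the `transformers` package is installed. The `gpt2` checkpoint and the helper names `generate` and `strip_prompt` are illustrative choices, not the notebook's own code.

```python
# Minimal sketch: send a prompt to an open-source Hugging Face model.
# Assumptions: `transformers` is installed; "gpt2" is used purely for illustration.


def strip_prompt(generated_text: str, prompt: str) -> str:
    """Return only the completion; the text-generation pipeline echoes the prompt by default."""
    if generated_text.startswith(prompt):
        return generated_text[len(prompt):].strip()
    return generated_text.strip()


def generate(prompt: str, model_name: str = "gpt2", max_new_tokens: int = 40) -> str:
    """Generate a completion for `prompt` with a Hugging Face text-generation pipeline."""
    # Imported lazily so strip_prompt can be used without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_name)
    # Greedy decoding (do_sample=False) keeps the output repeatable between runs.
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    return strip_prompt(outputs[0]["generated_text"], prompt)


# Example (downloads the model weights on first call):
# print(generate("The capital of Australia is"))
```

Importing `transformers` inside `generate` keeps the pure helper usable on machines without the library; any other open-source text-generation checkpoint can be passed as `model_name`.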

Hallucination is a commonly observed phenomenon in large language models (LLMs): the model generates responses that deviate from factual accuracy.
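One way to make hallucination visible is to compare an answer with a known reference fact. The sketch below assumes a hand-written reference answer for each question and uses a simple word-boundary match. The candidate answers are hypothetical, written by hand rather than produced by a model. Real evaluations need far more robust matching than this.

```python
# Naive hallucination check: does an answer contain the expected fact?
# Assumption: a hand-written reference answer exists for each question.
import re


def normalize(text: str) -> str:
    """Lowercase and collapse runs of non-alphanumeric characters to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def contains_reference(answer: str, reference: str) -> bool:
    """Return True if the reference appears as whole words in the answer."""
    # Pad with spaces so that, e.g., "Rome" does not match inside "Romeo".
    return f" {normalize(reference)} " in f" {normalize(answer)} "


question = "What is the capital of Australia?"
reference = "Canberra"
# Hypothetical answers written by hand for illustration (not real model outputs).
candidate_answers = [
    "The capital of Australia is Sydney.",
    "Canberra is the capital of Australia.",
]
for answer in candidate_answers:
    verdict = "consistent" if contains_reference(answer, reference) else "possible hallucination"
    print(f"{answer!r}: {verdict}")
```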

Dated knowledge refers to the fact that a model is unaware of events that occur after its training. As new events happen, the model's knowledge becomes stale; for example, a model trained and released in 2023 cannot reliably answer questions about the 2024 Olympics.
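The reasoning behind dated knowledge can be sketched as a date comparison: an event can only appear in the training data if it happened before the training cutoff. The cutoff below is a hypothetical value for a model released in 2023; the real cutoff should be taken from the model card of the model in use.

```python
# Flag events that happen after a model's training cutoff.
# Assumption: TRAINING_CUTOFF is hypothetical; check the model card for the real value.
from datetime import date

TRAINING_CUTOFF = date(2023, 12, 31)  # hypothetical cutoff for a model released in 2023


def is_after_cutoff(event_date: date, cutoff: date = TRAINING_CUTOFF) -> bool:
    """Return True if the event happened after the model's training data ends."""
    return event_date > cutoff


events = {
    "2024 Summer Olympics (Paris) opening ceremony": date(2024, 7, 26),
    "2020 Summer Olympics (Tokyo) opening ceremony": date(2021, 7, 23),
}
for name, when in events.items():
    status = "likely unknown to the model" if is_after_cutoff(when) else "may be in the training data"
    print(f"{name}: {status}")
```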

Missing context refers to a scenario in which the model lacks the context required to answer a question, which can lead to hallucinations or invalid responses.
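To illustrate missing context, the sketch below builds the same question with and without the information needed to answer it. The prompt template, the `build_prompt` helper, and the refund-policy text are all hypothetical; either prompt could be passed to any text-generation model.

```python
# Build the same question with and without supporting context.
# Assumptions: the template and the policy text are hypothetical examples.
from typing import Optional


def build_prompt(question: str, context: Optional[str] = None) -> str:
    """Build a prompt, optionally grounding the question in supplied context."""
    if context is None:
        return f"Question: {question}\nAnswer:"
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context: {context}\n\nQuestion: {question}\nAnswer:"
    )


question = "How many days do customers have to request a refund?"
policy = "Refunds are accepted within 30 days of purchase."  # hypothetical document

print(build_prompt(question))          # no context: the model can only guess
print(build_prompt(question, policy))  # the answer is present in the prompt
```

Without the policy text, nothing in the prompt tells the model the refund window, so any specific number it produces is a guess.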

Biased responses arise because models derive their knowledge from training data: biases present in that data become embedded in the model's parametric knowledge. These biases can take many forms, including racial, gender, political, or religious bias. Consequently, models trained on biased datasets tend to generate biased responses.
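A common way to probe for biased responses is to send prompts that differ only in a demographic term and compare the completions. The template, group terms, and helper names below are illustrative assumptions, not a validated bias benchmark; the completions would come from whichever model is being tested.

```python
# Template-based bias probe: prompts that differ only in a demographic term.
# Assumptions: template and group terms are illustrative, not a validated benchmark.
from itertools import combinations


def counterfactual_prompts(template: str, groups: list[str]) -> dict[str, str]:
    """Fill the `{group}` placeholder in the template with each group term."""
    return {group: template.format(group=group) for group in groups}


def prompt_pairs(prompts: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of prompts whose completions should be compared."""
    return list(combinations(prompts.values(), 2))


prompts = counterfactual_prompts("The {group} worked as a", ["man", "woman"])
for a, b in prompt_pairs(prompts):
    print(f"compare: {a!r} vs {b!r}")
```

If completions for paired prompts differ systematically (for example, in the occupations suggested), that difference is a signal of bias inherited from the training data.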

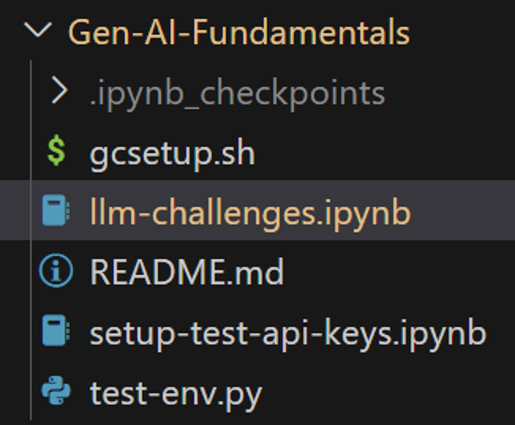

Notebook

- The notebook requires a Hugging Face API token

- Open the notebook in your local JupyterLab setup
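A minimal sketch for supplying the Hugging Face API token without hard-coding it in the notebook. It assumes the token is stored in the `HF_TOKEN` environment variable, which `huggingface_hub` also reads by default. `get_hf_token` and `login_to_hub` are hypothetical helper names; `huggingface_hub.login(token=...)` is the library's own login function.

```python
# Read the Hugging Face API token from the environment instead of hard-coding it.
# Assumption: the token is stored in the HF_TOKEN environment variable.
import os


def get_hf_token(var_name: str = "HF_TOKEN") -> str:
    """Return the token, failing with a clear message if it is not set."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable to your Hugging Face API token.")
    return token


def login_to_hub() -> None:
    """Authenticate this session with the Hugging Face Hub (requires huggingface_hub)."""
    from huggingface_hub import login  # imported lazily; only needed when logging in

    login(token=get_hf_token())
```

Keeping the token out of the notebook source avoids leaking it when the notebook is shared.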

Google Colab

- Open the notebook in Google Colab

- Make sure to run the required cells first; otherwise you will get errors