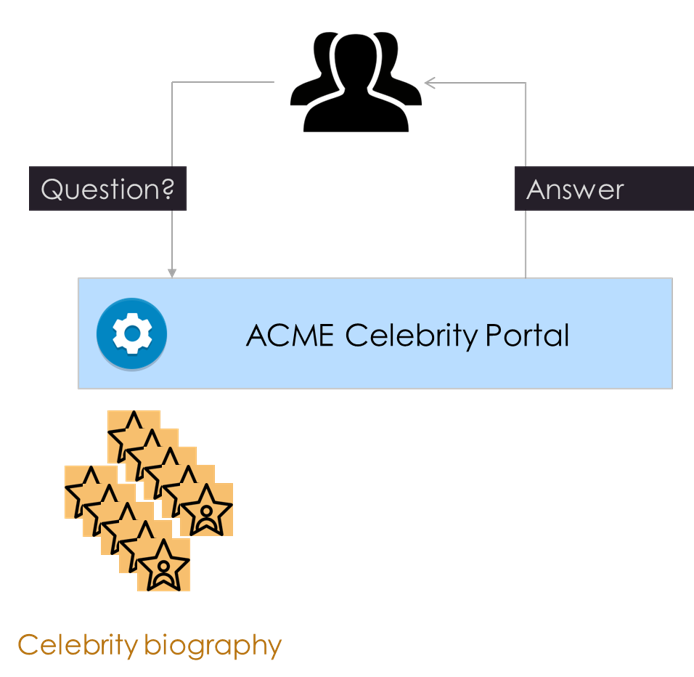

Exercise #2: Question Answering

Objective

Your startup is building a celebrity biography portal. Users of your application can ask questions about the celebrities. There are over 100,000 celebrity biographies in your database, which you built by scraping Wikipedia, IMDb and other websites. The biography data is stored as free-form text.

In the next team meeting, you are tasked with presenting a solution that can be built with minimal effort.

- On Hugging Face, identify a model that can do the task.
- Which AutoModelXXXX class would you use for this task?
  - Go through the documentation to understand the parameters.
  - Go through the documentation to understand the logits.
- Use the model to build a working sample in a notebook. You may use any or all of the following:
  - Auto classes (preferred)
  - Pipeline classes
  - Inference client
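For the Inference client option, a minimal sketch might look like the following. It assumes a recent huggingface_hub release that provides `InferenceClient.question_answering`, and that distilbert-base-uncased-distilled-squad (one of the reading-comprehension models listed in the solution) is served by the hosted Inference API, which can change over time. The function name `ask_via_inference_client` is hypothetical.

```python
from typing import Optional


def ask_via_inference_client(question: str, context: str,
                             model: str = "distilbert-base-uncased-distilled-squad",
                             token: Optional[str] = None) -> str:
    """Hypothetical helper: answer one question with a hosted extractive-QA model."""
    # Imported here so the helper can be defined even where huggingface_hub is not installed.
    from huggingface_hub import InferenceClient

    # Assumption: the chosen model is currently served by the hosted Inference API.
    client = InferenceClient(token=token)
    result = client.question_answering(question=question, context=context, model=model)
    # A list can come back when several answers are requested; keep the top one.
    if isinstance(result, list):
        result = result[0]
    return result.answer
```

Calling `ask_via_inference_client("In what city and state did Beyonce grow up?", context)` sends the request over the network, so no model weights are downloaded locally.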

Note:

Focus only on the gen-AI part; your teammate will build the website :-) on top of the API that you code.
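One way to hand the gen-AI part over is a plain Python function that the website can call. The sketch below is hypothetical: `answer_questions`, `build_default_qa` and the result dict layout are illustrative names, not part of the exercise. It defaults to a transformers question-answering pipeline but accepts any callable with the same keyword interface, so the web layer can be tested without downloading a model.

```python
from typing import Any, Callable, Dict, List, Optional

# A QA callable takes question= and context= keyword arguments and returns a dict
# with at least "answer" and "score", the shape a transformers question-answering
# pipeline returns for a single question.
QAFunction = Callable[..., Dict[str, Any]]


def build_default_qa() -> QAFunction:
    """Create a question-answering pipeline (downloads the model on first use)."""
    # Lazy import keeps the API layer importable and testable without transformers.
    from transformers import pipeline

    return pipeline("question-answering", model="distilbert-base-uncased-distilled-squad")


def answer_questions(context: str, questions: List[str],
                     qa: Optional[QAFunction] = None) -> List[Dict[str, Any]]:
    """Hypothetical API entry point: answer every question against one biography."""
    qa = qa if qa is not None else build_default_qa()
    results = []
    for question in questions:
        prediction = qa(question=question, context=context)
        results.append({
            "question": question,
            "answer": prediction["answer"],
            "score": float(prediction["score"]),
        })
    return results
```

With the sample context and questions from this exercise, `answer_questions(context, questions)` returns one dict per question, which maps directly onto a JSON response body. In a real service, build the pipeline once at startup and pass it in as `qa` instead of rebuilding it per request.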

Sample document

For additional Q&A samples, refer to the SQuAD 2.0 dataset.
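SQuAD 2.0 is published on the Hugging Face Hub, so extra samples can be pulled with the datasets library. This sketch assumes `pip install datasets` (not in this exercise's package list) and the dataset id `squad_v2`; the helper names are hypothetical. In SQuAD 2.0 an unanswerable question has an empty `answers["text"]` list, which the conversion helper preserves.

```python
from typing import Any, Dict, List, Tuple


def load_squad_v2_samples(n: int = 5, split: str = "validation") -> List[Dict[str, Any]]:
    """Download SQuAD 2.0 from the Hugging Face Hub and return the first n records."""
    # Lazy import; assumes the extra `datasets` package is installed.
    from datasets import load_dataset

    dataset = load_dataset("squad_v2", split=split)
    return [dataset[i] for i in range(n)]


def to_qa_pair(example: Dict[str, Any]) -> Tuple[str, str, List[str]]:
    """Return (question, context, gold answers); an empty list marks an unanswerable question."""
    return example["question"], example["context"], list(example["answers"]["text"])
```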

context = """Beyonce Giselle Knowles-Carter (/biːˈjɒnseɪ/ bee-YON-say) (born September 4, 1981)
is an American singer, songwriter, record producer and actress. Born and raised in Houston,
Texas, she performed in various singing and dancing competitions as a child, and rose
to fame in the late 1990s as lead singer of R&B girl-group Destiny's Child. Managed by her
father, Mathew Knowles, the group became one of the world's best-selling girl groups of
all time. Their hiatus saw the release of Beyonce's debut album, Dangerously in Love (2003),
which established her as a solo artist worldwide, earned five Grammy Awards and featured the
Billboard Hot 100 number-one singles "Crazy in Love" and "Baby Boy"."""

Sample questions

questions = [
    "When did Beyonce start becoming popular?",
    "When did Beyonce leave Destiny's Child and become a solo singer?",
    "What areas did Beyonce compete in when she was growing up?",
    "In what city and state did Beyonce grow up?",
    "In what R&B group was she the lead singer?",
]

Solution

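Identify a model

QA task: on the Hugging Face portal, filter models by the NLP task Question Answering.

Which Auto Class would you use?

AutoModelForQuestionAnswering

What logits are in the output?

QuestionAnsweringModelOutput, which holds two logits tensors of shape (batch_size, sequence_length): start_logits (the score of each token being the first token of the answer) and end_logits (the score of each token being the last token of the answer).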
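To see how start_logits and end_logits turn into an answer, the sketch below scores every candidate span as start_logit + end_logit and keeps the best one with start <= end and a bounded length, a common decoding strategy for extractive QA. It works on plain Python lists (in practice you would pass `outputs.start_logits[0].tolist()` and `outputs.end_logits[0].tolist()`). The name `best_span` and the default `max_answer_len=30` are illustrative choices; a full implementation should also exclude question and special tokens, as the transformers question-answering pipeline does.

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float],
              max_answer_len: int = 30) -> Tuple[int, int, float]:
    """Pick the token span (start, end) that maximizes start_logit + end_logit.

    Only spans with start <= end and at most max_answer_len tokens are considered,
    which avoids the end-before-start spans that two independent argmax calls
    can produce.
    """
    best = (0, 0, float("-inf"))
    for start, start_score in enumerate(start_logits):
        # end ranges over start .. start + max_answer_len - 1 (clipped to the sequence)
        last = min(start + max_answer_len, len(end_logits))
        for end in range(start, last):
            score = start_score + end_logits[end]
            if score > best[2]:
                best = (start, end, score)
    return best
```

For example, with start_logits `[0.0, 0.0, 5.0]` and end_logits `[4.0, 0.0, 1.0]`, independent argmax would pick start 2 and end 0 (an invalid span), while `best_span` returns `(2, 2, 6.0)`.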

Model alternatives

Factoid, Non-Factoid, Open-domain

In general, zero-shot SOTA models work well here.

- google/flan-t5-small
- OpenAI GPT-3.5 Turbo (gpt-3.5-turbo, via the OpenAI API)
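With a zero-shot model such as google/flan-t5-small, the context and question go into a text prompt and the answer is generated rather than extracted. Flan-T5 is an encoder-decoder model, so it is loaded with AutoModelForSeq2SeqLM. The prompt template below is an assumption, and `build_prompt` and `ask_flan_t5` are hypothetical names.

```python
def build_prompt(question: str, context: str) -> str:
    """Format a zero-shot reading-comprehension prompt (the template is an assumption)."""
    return (
        "Read the text and answer the question.\n\n"
        f"Text: {context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def ask_flan_t5(question: str, context: str,
                model_name: str = "google/flan-t5-small",
                max_new_tokens: int = 32) -> str:
    """Generate a free-form answer with a seq2seq model (downloads weights on first call)."""
    # Lazy imports so the prompt helper can be used without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    inputs = tokenizer(build_prompt(question, context), return_tensors="pt", truncation=True)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Loading the model inside the function keeps the sketch short; for an API, load the tokenizer and model once at startup and reuse them across requests.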

Reading comprehension

- distilbert-base-uncased-distilled-squad
- aware-ai/bart-squadv2
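For the reading-comprehension (extractive) route, AutoTokenizer and AutoModelForQuestionAnswering can be combined as below. This is a sketch assuming a fast tokenizer (required for `return_offsets_mapping`) and the distilbert-base-uncased-distilled-squad checkpoint; `ask_auto_classes` and `span_text` are hypothetical helper names. Taking the argmax of start_logits and end_logits independently is the simplest decoding; it can occasionally pick an end before the start, in which case `span_text` returns an empty string. Contexts longer than the model's maximum length are simply truncated here, whereas the transformers pipeline splits long contexts into overlapping windows.

```python
from typing import List, Tuple


def span_text(context: str, offsets: List[Tuple[int, int]], start: int, end: int) -> str:
    """Map token indices back to the characters of the context via the tokenizer offsets."""
    return context[offsets[start][0]:offsets[end][1]]


def ask_auto_classes(question: str, context: str,
                     model_name: str = "distilbert-base-uncased-distilled-squad") -> str:
    """Extractive QA with AutoTokenizer + AutoModelForQuestionAnswering."""
    # Lazy imports so span_text can be used without torch/transformers installed.
    import torch
    from transformers import AutoModelForQuestionAnswering, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForQuestionAnswering.from_pretrained(model_name)

    # Question and context are encoded together as one sequence pair;
    # only the context (second sequence) is truncated if it is too long.
    inputs = tokenizer(question, context, return_tensors="pt",
                       truncation="only_second", return_offsets_mapping=True)
    offsets = inputs.pop("offset_mapping")[0].tolist()
    sequence_ids = inputs.sequence_ids(0)  # None for special tokens, 0 question, 1 context

    with torch.no_grad():
        outputs = model(**inputs)  # QuestionAnsweringModelOutput

    # Mask out everything that is not a context token before taking the argmax.
    not_context = torch.tensor([sid != 1 for sid in sequence_ids])
    start_logits = outputs.start_logits[0].masked_fill(not_context, float("-inf"))
    end_logits = outputs.end_logits[0].masked_fill(not_context, float("-inf"))
    start = int(start_logits.argmax())
    end = int(end_logits.argmax())
    return span_text(context, offsets, start, end)
```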

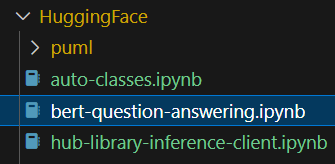

Solution notebook in the course repository: HuggingFace/bert-question-answering.ipynb

Open it in Google Colab.

- Install the required packages before running the code cells:

pip install transformers torch huggingface_hub
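Before running the notebook cells, a quick check that the three packages installed correctly can save a confusing ImportError later. This sketch uses only the standard library (`importlib.metadata`, Python 3.8+); `installed_versions` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(
    packages: Iterable[str] = ("transformers", "torch", "huggingface_hub"),
) -> Dict[str, Optional[str]]:
    """Return the installed version of each package, or None if it is missing."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found
```

Any package that maps to `None` still needs to be installed before the model code will run.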