Exercise #2: Create & publish dataset

Objective

- Create a dataset with 'train' & 'validation' splits for model pre-training experimentation

- The total number of rows in the dataset will be a minuscule part of the dataset wikimedia/wikipedia, whose original size is roughly 72 GB

- The original dataset has multiple configs, one per language. You will use only the config wikipedia/20220301.en
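For a rough sense of scale, suppose the 0.01% sampling rate used in the steps below were applied to the full ~72 GB dataset and the sampled rows were of average length. The sample would then amount to only about 7.2 MB (decimal units). Because only the English config is sampled, the actual subset is smaller still:

$$72\ \text{GB} \times 0.01\% = 72 \times 10^{9}\ \text{B} \times 10^{-4} = 7.2 \times 10^{6}\ \text{B} = 7.2\ \text{MB}$$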

Steps

- Load the dataset wikipedia/20220301.en

- Split the original data (which ships with only a 'train' split) so that 0.01% of it goes into a separate 'test' split

- We will use ONLY the 'test' split, i.e., 0.01% of the original data

- Pre-process: break wiki paragraphs into sentences

- Each row in the original dataset is a large text blob (one or more paragraphs)

- Sentences from the same row share that row's attributes [id, url, title]

- Do NOT shuffle the dataset

- Split the dataset into [train, validation] with a [90%, 10%] ratio

- Upload the dataset to the Hugging Face Hub, e.g., I pushed it to acloudfan/wikismall
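The pre-processing and splitting steps above can be sketched in plain Python. This is a minimal sketch and not the notebook's code. The helper names `explode_to_sentences` and `sequential_split` are hypothetical. Rows are assumed to be dicts with the `id`, `url`, `title` and `text` fields. The regex sentence splitter is a naive stand-in for whatever tokenizer the notebook actually uses.

```python
import re
from typing import Dict, List

Row = Dict[str, str]

# ASSUMPTION: naive sentence boundary = '.', '!' or '?' followed by whitespace.
# A real pipeline would likely use a proper sentence tokenizer instead.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def explode_to_sentences(rows: List[Row]) -> List[Row]:
    """Hypothetical helper: turn each article row into one row per sentence.

    Every sentence row keeps the id, url and title of the row it came from.
    """
    out: List[Row] = []
    for row in rows:
        # A row's text holds one or more paragraphs separated by newlines.
        for paragraph in row["text"].split("\n"):
            for sentence in _SENTENCE_END.split(paragraph.strip()):
                if sentence:  # skip empty strings produced by blank lines
                    out.append({
                        "id": row["id"],
                        "url": row["url"],
                        "title": row["title"],
                        "text": sentence,
                    })
    return out


def sequential_split(rows: List[Row], train_fraction: float = 0.9) -> Dict[str, List[Row]]:
    """Hypothetical helper: split WITHOUT shuffling.

    The first `train_fraction` of rows go to 'train', the rest to 'validation',
    so sentences stay in their original order.
    """
    cut = int(len(rows) * train_fraction)
    return {"train": rows[:cut], "validation": rows[cut:]}


if __name__ == "__main__":
    sample = [
        {"id": "1", "url": "u1", "title": "A", "text": "First one. Second one!\nThird?"},
        {"id": "2", "url": "u2", "title": "B", "text": "Only sentence."},
    ]
    splits = sequential_split(explode_to_sentences(sample))
    print({name: [r["text"] for r in part] for name, part in splits.items()})
```

With the Hugging Face `datasets` library, the same per-row logic would typically go into `Dataset.map(..., batched=True, remove_columns=...)`, since a batched map may return more rows than it receives. The unshuffled 90/10 split corresponds roughly to `train_test_split(test_size=0.1, shuffle=False)`, with the resulting 'test' key renamed to 'validation'. The `DatasetDict` can then be uploaded with `push_to_hub(...)`.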

Solution

Dataset on HF Hub

Check out the final dataset at acloudfan/wikismall

Notebook

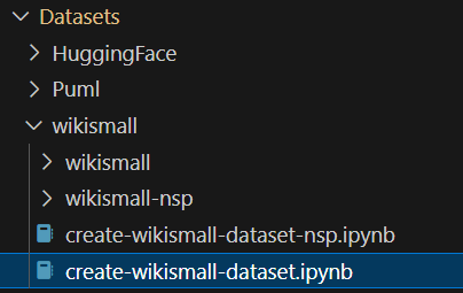

Datasets/wikismall/create-wikismall-dataset.ipynb

Google Colab

- Make sure to follow the instructions for setting up packages